Azure OpenAI Tool Calling

Overview

You can use this Snap to provide external tools for the model to call, supplying internal data and information for the model's responses.

Transform-type Snap

Works in Ultra Tasks

Prerequisites

None.

Limitations and known issues

None.

Snap views

| View | Description | Examples of upstream and downstream Snaps |
|---|---|---|
| Input | This Snap has one document input view, typically carrying the input message for the Azure OpenAI model. | |
| Output | This Snap has two output views. One contains the response from the LLM; the other contains the tool call response extracted from the LLM response, which includes a json_arguments field. | |
| Error | Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter when running the pipeline by choosing one of the options from the When errors occur list under the Views tab. Learn more about Error handling in Pipelines. | |

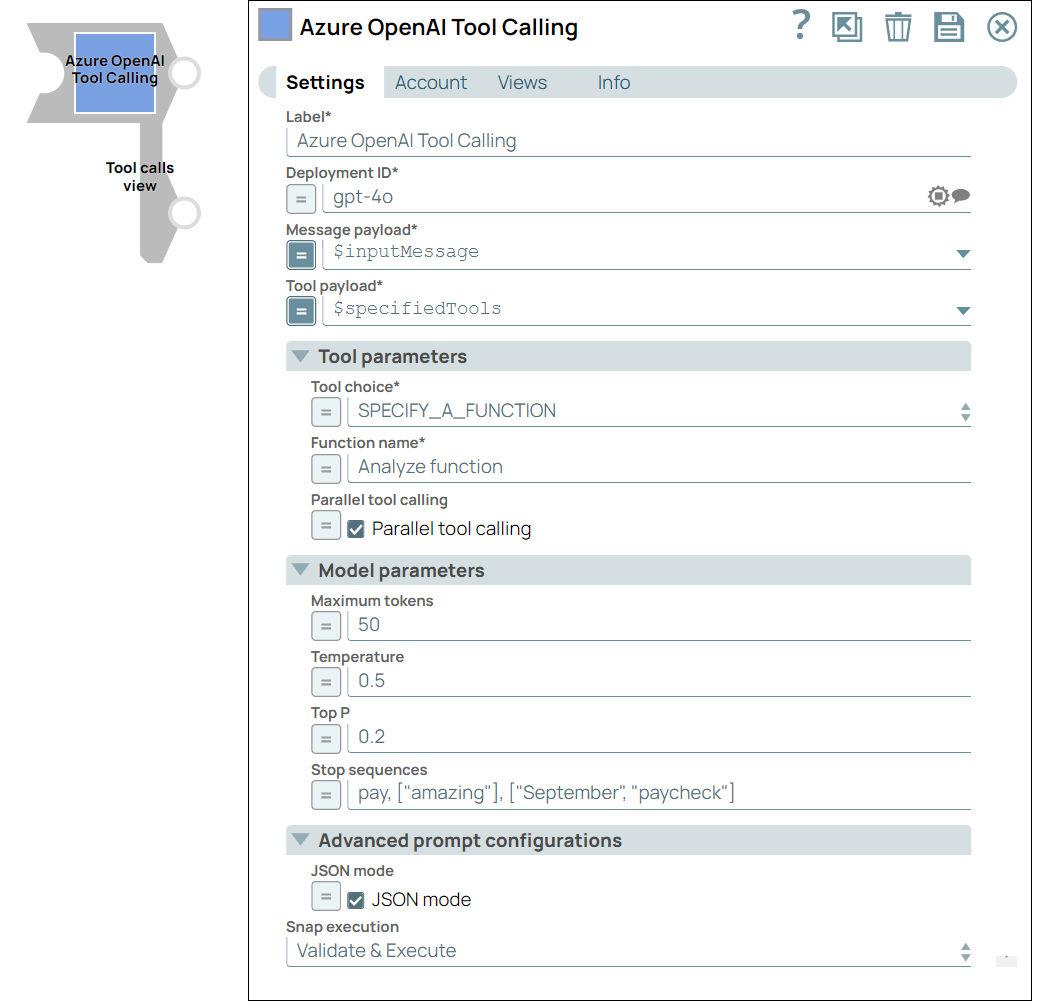
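The second output view is described as carrying the tool call extracted from the LLM response, with a json_arguments field. As a rough illustration of what such an extraction involves, the Python sketch below pulls tool calls out of a response shaped like a standard chat completions result and parses each call's argument string into an object. The response layout follows the public chat completions format; the exact structure of the Snap's output documents and the helper name `extract_tool_calls` are assumptions made for illustration.

```python
import json


def extract_tool_calls(response):
    """Return one record per tool call in a chat-completions-style response.

    Hypothetical helper: the Snap's real output document may be shaped differently.
    """
    records = []
    for choice in response.get("choices", []):
        message = choice.get("message", {})
        # "tool_calls" can be absent or None when the model answers directly.
        for call in message.get("tool_calls") or []:
            function = call.get("function", {})
            raw_args = function.get("arguments") or "{}"
            records.append({
                "id": call.get("id"),
                "name": function.get("name"),
                "arguments": raw_args,                  # original JSON string
                "json_arguments": json.loads(raw_args)  # parsed object
            })
    return records


# Hypothetical response used only to exercise the helper.
sample_response = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": None,
                "tool_calls": [
                    {
                        "id": "call_1",
                        "type": "function",
                        "function": {
                            "name": "get_weather",
                            "arguments": '{"city": "Paris"}',
                        },
                    }
                ],
            }
        }
    ]
}

tool_calls = extract_tool_calls(sample_response)
print(tool_calls)
```

Parsing the argument string once, close to the model response, means downstream steps can read individual arguments without repeating the JSON decoding.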

Snap settings

- Expression icon: Allows using JavaScript syntax to access SnapLogic Expressions to set field values dynamically (if enabled). If disabled, you can provide a static value. Learn more.

- SnapGPT: Generates SnapLogic Expressions based on natural language using SnapGPT. Learn more.

- Suggestion icon: Populates a list of values dynamically based on your Snap configuration. You can select only one attribute at a time using the icon. Type into the field if it supports a comma-separated list of values.

- Upload: Uploads files. Learn more.


| Field / Field set | Type | Description |
|---|---|---|
| Label | String | Required. Specify a unique name for the Snap. Modify this to be more appropriate, especially if the pipeline contains more than one Snap of the same type. Default value: Azure OpenAI Tool Calling. Example: Tool Calling |
| Deployment ID | String/Expression/Suggestion | Required. Specify the ID of the deployment that generates the chat completions. Default value: N/A. Example: gpt-4o |
| Message payload | String/Expression | Required. Specify the message payload for the model to process. This payload typically includes input messages in JSON format. Important: How the Snap processes the payload depends on the type of message list in the input document. Default value: N/A. Example: $inputMessage |
| Tool payload | String/Expression | Required. Specify the tool payload that defines the tools the model can call. Default value: N/A. Example: $specifiedTools |
| Output handling | | Configure how the Snap handles the output data. |
| Store | Checkbox/Expression | Optional. Select this checkbox to persist the output. Default status: Deselected |
| Advanced tool configuration | | Use this field set to configure advanced options for tool calling, giving finer control over how tools are invoked within the pipeline. |
| Tool choice | Dropdown list/Expression | Required. Choose the tool or function you want the model to call. Default value: AUTO. Example: REQUIRED |
| Function name | String/Expression | Required. Appears when SPECIFY A FUNCTION is selected in Tool choice or when Tool choice is expression-enabled. Provide the name of the function that you want the model to call. Important: Azure OpenAI limits function names to 1024 characters. Default value: None. Example: Analyze sentiment |
| Parallel tool calling | Checkbox/Expression | Select this checkbox to enable parallel calls to multiple tools. Default status: Selected |
| Disable parallel tool calling parameter | Checkbox | Select this checkbox to remove the parallel tool calling parameter from the request body. Deselect this checkbox for OpenAI o-series models. Default status: Deselected |
| Model parameters | | Configure the parameters to tune the model runtime. |
| Reasoning effort | Dropdown list | Choose the reasoning effort level for the selected model. This setting supports only OpenAI o-series models. Default value: disabled. Example: low |
| Maximum tokens | Integer/Expression | Specify the maximum number of tokens to generate in the chat completion. If left blank, the default value of the endpoint is used. Default value: N/A. Example: 50 |
| Temperature | Decimal/Expression | Specify the sampling temperature as a decimal value between 0 and 1. If left blank, the default value of the endpoint is used. Default value: N/A. Example: 0.2 |
| Top P | Decimal/Expression | Specify the nucleus sampling value as a decimal value between 0 and 1. If left blank, the default value of the endpoint is used. Default value: N/A. Example: 0.2 |
| Stop sequences | String/Expression | Specify a sequence of texts or tokens at which the model stops generating further output. Learn more. Default value: N/A. Example: pay, ["amazing"], ["September", "paycheck"] |
| Advanced prompt configuration | | Configure the prompt settings to guide the model responses and optimize output processing. |
| Structured outputs | String/Expression | Enter the schema or expression to ensure that the model always returns outputs that match your defined JSON Schema. Learn more about how to use the Structured Outputs capability. Default value: N/A. Example: $response_format.json_schema |
| JSON mode | Checkbox/Expression | Select this checkbox to enable the model to generate strings that can be parsed into valid JSON objects. Default status: Deselected |
| Snap execution | Dropdown list | Choose one of the three modes in which the Snap executes. |
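To make the Message payload, Tool payload, and Tool choice settings more concrete, the Python sketch below builds an input document whose `inputMessage` and `specifiedTools` fields match the example expressions `$inputMessage` and `$specifiedTools`. The payload shapes follow the public chat completions function-calling format; that the Snap accepts them in exactly this form is an assumption. The `get_weather` tool, the `to_tool_choice` helper, and the mapping from Tool choice options to request values are hypothetical and shown only for illustration.

```python
import json

# Hypothetical message list in the chat completions format.
messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is the weather in Paris?"},
]

# Hypothetical tool definition in the function-calling format.
tools = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Look up the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]


def to_tool_choice(option, function_name=None):
    """Map a Tool choice option to an API-style tool_choice value.

    Assumed mapping; the Snap's internal behavior is not documented here.
    """
    if option == "AUTO":
        return "auto"
    if option == "REQUIRED":
        return "required"
    if option == "SPECIFY A FUNCTION":
        if not function_name:
            raise ValueError("A function name is needed when specifying a function.")
        return {"type": "function", "function": {"name": function_name}}
    raise ValueError(f"Unsupported option: {option}")


# Document an upstream step could pass to the Snap (field names taken from
# the example expressions $inputMessage and $specifiedTools).
input_document = {"inputMessage": messages, "specifiedTools": tools}

print(json.dumps(input_document, indent=2))
print(to_tool_choice("SPECIFY A FUNCTION", "get_weather"))
```

Keeping the messages and the tool definitions in separate fields mirrors the two payload settings, so each can be bound to its own expression.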

Troubleshooting

Duplicate tool names found

Duplicate tool names cause conflicts; the system automatically renames duplicates to resolve conflicts.

Provide unique names for each tool to avoid automatic renaming.
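One way to catch duplicate names before the pipeline runs is to check the tool payload up front. The Python sketch below assumes tools in the function-calling format, where each entry carries its name under `function.name`; the helper `find_duplicate_tool_names` is hypothetical and not part of the Snap.

```python
from collections import Counter


def find_duplicate_tool_names(tools):
    """Return the sorted tool names that appear more than once in the payload."""
    names = [tool["function"]["name"] for tool in tools]
    counts = Counter(names)
    return sorted(name for name, count in counts.items() if count > 1)


# Hypothetical payload with one repeated name.
example_tools = [
    {"type": "function", "function": {"name": "get_weather"}},
    {"type": "function", "function": {"name": "get_time"}},
    {"type": "function", "function": {"name": "get_weather"}},
]

duplicates = find_duplicate_tool_names(example_tools)
if duplicates:
    print("Rename these tools so each name is unique:", duplicates)
```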