AgentCreator

SnapLogic AgentCreator enables enterprises to build LLM-powered agents, assistants, and applications that augment human capabilities and integrate AI into data pipelines. By combining dynamic iteration with real-time generative decision-making, AgentCreator lets you design, deploy, and scale AI agents that streamline complex workflows and unlock new business opportunities, all within a single, secure platform. Use this no-code solution to accelerate and automate your business workflows.

SnapLogic Implementation of GenAI

Designer

AgentCreator resides in SnapLogic Designer. AgentCreator’s features and functionalities are embedded in the SnapLogic paradigm of building pipelines from Snaps. Designer is the same UI you use to build application and data integrations from Snaps, the core components. AgentCreator consists of a number of Snap Packs with LLM-based functionalities as well as Snaps that provide core functionality for building agents, assistants, and apps.

Snaps

Snaps are grouped into Snap Packs. In SnapLogic, you use Snaps to connect to third-party API endpoints. AgentCreator consists of Snap Packs that connect to prominent LLMs: Claude, OpenAI, Azure OpenAI, and Google Gemini. Each Snap Pack consists of Snaps that offer a set of capabilities. AgentCreator also provides connections to vector database endpoints and Snaps to facilitate your generative AI and agentic operations.

Pipelines

Pipelines are the engine that powers your generative AI applications and agentic processes. You can orchestrate a dynamic sequence of pipeline runs to accomplish a complex task, then deploy it into a production environment as a Task. Pipelines also use JSON as the internal document format, which makes it easy to set up the flow of data across multiple systems.

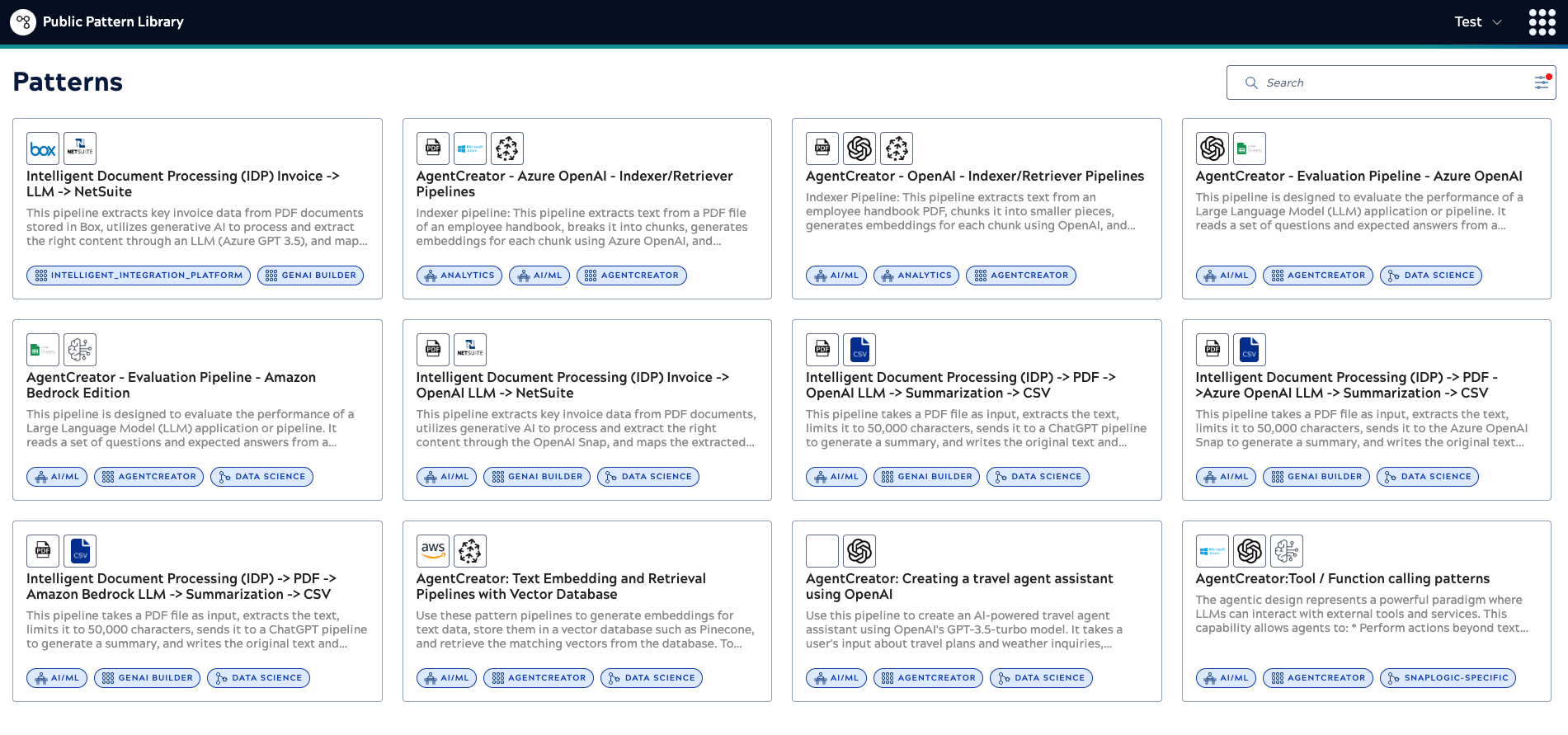

Patterns

Patterns are canonical pipeline designs that you can repurpose easily in your environment. The SnapLogic Public Pattern Library includes the following AgentCreator pipeline patterns.

You can filter for these patterns by selecting AgentCreator and GenAI Builder as the search criteria.

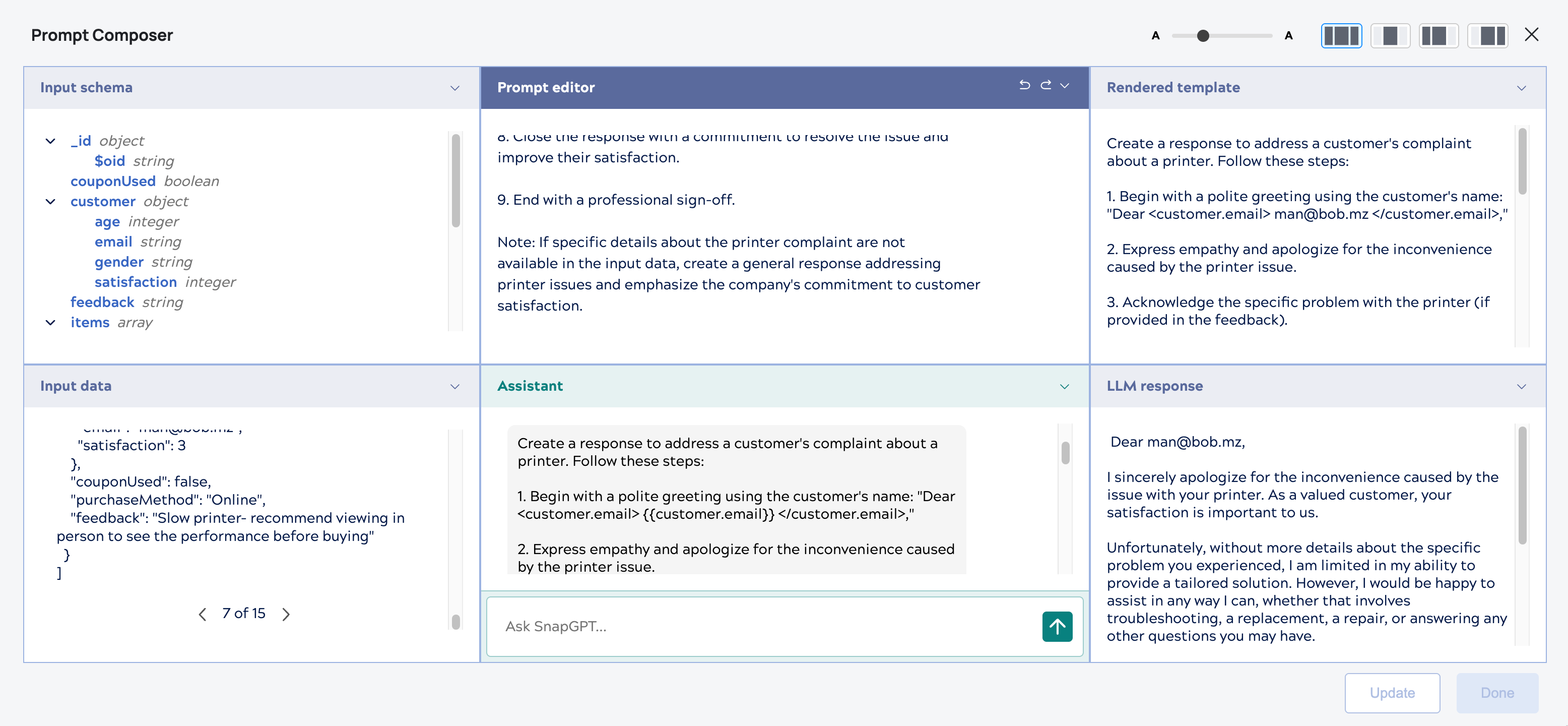

Prompt Composer

Prompt Composer is the console for the development of prompts. You can refine prompts and view the LLM responses in one UI with minimal context switching. Prompt Composer also provides assistance with SnapGPT for Prompts.

Agents

Refer to the following articles for building Agents in AgentCreator:

Supported Capabilities

AgentCreator supports a series of LLM capabilities across different vendors and models. SnapLogic adds to this with a number of key core components.

Text Embedding

Text embedding provides a vector (numeric representation) of the input data based on the embedding model and method. This supports a wide variety of output use cases, such as semantic search.

- Amazon Titan Embedder

- Azure OpenAI Embedder

- OpenAI Embedder

- Google Gemini Embedder

- Google VertexAI Embedder
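
To illustrate how embedding vectors support semantic search, here is a minimal sketch in Python. The three-dimensional vectors and document names are made up for illustration; a real embedding model returns far longer vectors, and this code does not call any of the Embedder Snaps listed above.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings (assumption: invented values, not model output).
documents = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "api rate limits": [0.0, 0.2, 0.9],
}
query_vector = [0.8, 0.2, 0.1]  # invented embedding of a question about returns

def rank(query, docs):
    """Order document names from most to least similar to the query vector."""
    return sorted(docs, key=lambda name: cosine_similarity(query, docs[name]), reverse=True)

ranking = rank(query_vector, documents)
print(ranking[0])  # prints "refund policy"
```

A vector database performs the same kind of nearest-neighbor comparison, typically with an index so that it scales beyond a handful of documents.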

Vector database operations

Text Generation

Text generation takes any type of input and generates text-based output based on an input prompt. With text-only input, typical tasks include summarizing the input data. The input might come from a question-and-answer dialog held in a large context window, or the summary could be a RAG completion that adds embedded vector content to the context window. Some cases might also require parsing PDF content with another system.

In general, the prompt generator Snaps support an advanced plain-text mapping system via mustache templates. The resulting prompts can be fed into any Text Generation Snap, either in the messages format or as a single user prompt, to send the input data to the LLM API and get the generated response.
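
The following Python sketch shows the general idea of filling a mustache-style template from an input document and then packaging the result either as a single user prompt or in the messages format. The `render` helper is a simplified stand-in that handles only plain `{{name}}` tags; it is not the prompt generator Snaps' implementation, and the template, field names, and system message are assumptions made for illustration.

```python
import re

def render(template, values):
    """Replace {{name}} tags with values; a tiny subset of Mustache (no sections, no escaping)."""
    def substitute(match):
        # Unknown names render as an empty string.
        return str(values.get(match.group(1), ""))
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)

# Hypothetical template and input document.
template = "Summarize the following ticket for {{audience}}:\n{{ticket_text}}"
document = {
    "audience": "a support manager",
    "ticket_text": "Customer cannot log in after password reset.",
}

prompt = render(template, document)

# Option 1: a single user prompt string.
single_prompt = prompt

# Option 2: the messages format used by chat-style LLM APIs.
messages = [
    {"role": "system", "content": "You are a concise assistant."},
    {"role": "user", "content": prompt},
]

print(prompt)
```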

Structured-Output Text Generation

Plain text generation suits chatbot use cases, because the generated output is pushed to the user directly without modification. Integration use cases instead need structured output that can propagate through various systems. Therefore, most Text Generation Snaps support JSON mode, which provides output in a structured and accessible manner. Otherwise, you would have to parse JSON out of the response manually, which can be complicated because of excess data in the generated response. JSON mode benefits agent building, because most GenAI API endpoints are intended for conversational chat rather than integration.
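
As a rough illustration of why structured output is easier to integrate, the Python sketch below compares a chat-style response with a JSON-mode response. Both response strings are invented for this example, and `extract_json` is a hypothetical fallback helper, not part of any Snap.

```python
import json

# Hypothetical responses, made up for illustration.
chat_style = (
    "Sure! Here is the data you asked for:\n"
    '{"customer": "Acme", "priority": "high"}\n'
    "Let me know if you need more."
)
json_mode = '{"customer": "Acme", "priority": "high"}'

def extract_json(text):
    """Fallback for chat-style output: return the first JSON object found in the text."""
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            obj, _end = decoder.raw_decode(text, start)
            return obj
        except json.JSONDecodeError:
            # Not valid JSON at this brace; try the next one.
            start = text.find("{", start + 1)
    raise ValueError("no JSON object found")

# With structured output, one call is enough.
parsed_direct = json.loads(json_mode)

# Without it, the surrounding chatter has to be skipped first.
parsed_fallback = extract_json(chat_style)

print(parsed_direct == parsed_fallback)  # prints True
```

The fallback works for this simple case, but it is exactly the kind of manual parsing that structured output is meant to avoid.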

Multimodal Input Text Generation

- OpenAI and Azure OpenAI support only certain image input types.

- SnapLogic supports multimodal processing of PDFs into images and potentially extracting the text directly.

- Amazon Bedrock-based models might support Document or Image input.

- Google Gemini supports all multimodal input but limits input file size. However, models typically support upload via Google storage for variable-sized inputs. Multimodal input support allows the models to interpret visual and audio inputs.

Managed Conversation Text Generation

Managed conversation text generation aligns with OpenAI Assistants and Amazon Bedrock Agents, which support conversational threads within their own applications and might have different ways to support managed RAG. The workflow requires you to configure the managed conversation tool (Agents for Bedrock, Assistants for OpenAI/Azure OpenAI) in its respective console before you can select the tool instance from the suggest field in the corresponding Snap.

Managed RAG

One of the benefits of Managed Conversation Text Generation is the support for managed tools. For both Amazon Bedrock Agents and OpenAI Assistants, the managed vector store can either automatically add context to a chat conversation or provide the output of a vector query. This makes it easier to add new files to a vector store automatically, without having to chunk or embed the data yourself. Instead, you allow another system to manage it automatically. These systems can also manage a full conversation or provide a blank slate for every request. Our support for OpenAI and Azure OpenAI extends to the management of Vector Stores (and file uploading) to improve the contextual awareness of future queries.
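
For comparison, the manual chunking step that a managed vector store takes off your hands might look like the Python sketch below. The word-based splitting, the chunk size, and the overlap are assumptions chosen for illustration; managed services use their own chunking strategies.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of up to chunk_size words, each sharing overlap words with the previous one."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

# Small example: 12 words, 5 per chunk, 2 shared between neighbors.
sample = " ".join(f"w{i}" for i in range(12))
chunks = chunk_words(sample, chunk_size=5, overlap=2)
for chunk in chunks:
    print(chunk)
```

Each chunk would then be embedded and written to a vector store, which is the work the managed option handles for you.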

Tool Calling with Text Generation

We leverage the Tool Calling Snaps in the individual Snap Packs, where the pipelines can hold the entire conversational history, from the system prompt to all user and assistant messages. This makes it easier to debug the individual operations performed in each iteration at runtime.
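
The Python sketch below illustrates the general tool-calling loop with a full conversation history. Everything in it is hypothetical: `fake_model` stands in for an LLM call, `get_order_status` is an invented tool, and the message shape is simplified rather than any vendor's exact schema or the Tool Calling Snaps' internal format.

```python
import json

def get_order_status(order_id):
    """Hypothetical tool; a real pipeline would query an actual system here."""
    return {"order_id": order_id, "status": "shipped"}

TOOLS = {"get_order_status": get_order_status}

def fake_model(messages):
    """Stand-in for an LLM call: requests a tool once, then answers from the tool result."""
    last = messages[-1]
    if last["role"] == "tool":
        result = json.loads(last["content"])
        return {"role": "assistant",
                "content": f"Order {result['order_id']} is {result['status']}."}
    return {"role": "assistant", "content": None,
            "tool_call": {"name": "get_order_status", "arguments": {"order_id": "A-100"}}}

def run_agent(user_prompt, max_iterations=5):
    """Loop until the model stops requesting tools; return the full history."""
    messages = [
        {"role": "system", "content": "You answer order questions using tools."},
        {"role": "user", "content": user_prompt},
    ]
    for _ in range(max_iterations):
        reply = fake_model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            break  # no tool requested, so this reply is the final answer
        output = TOOLS[call["name"]](**call["arguments"])
        messages.append({"role": "tool", "name": call["name"],
                         "content": json.dumps(output)})
    return messages

history = run_agent("Where is order A-100?")
for message in history:
    print(message["role"], "->", message.get("content") or message.get("tool_call"))
```

Because every iteration appends to the same `messages` list, you can inspect exactly which tool was requested and what it returned at each step.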

Advanced Document Parsing

This includes PDF and unstructured data. While you can send full documents to LLMs, you might need support for full document parsing, or need to parse document types not supported internally by SnapLogic, such as Word documents, PowerPoint presentations, and Rich Text Format files.