Use Case: Iris flower classification

Overview

This use case demonstrates the application of machine learning in taxonomy, specifically classifying species of Iris flowers using a neural network model deployed in SnapLogic.

Problem Scenario

Taxonomy is the science of classifying organisms, including plants and animals. In 1936, R.A. Fisher published a paper introducing a dataset of measurements from Iris flowers that could be used to distinguish flower species based on sepal and petal dimensions. This dataset remains popular for classification studies in machine learning.

Description

The Iris dataset includes four features—sepal length, sepal width, petal length, and petal width—and three classes representing species: Iris setosa, Iris versicolor, and Iris virginica. We will build a simple neural network model to classify these species and then host it as an API within the SnapLogic platform.
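To make the data layout concrete, the sketch below shows one record and how the three species names can be turned into integer labels and one-hot vectors, the form a neural network classifier typically trains on. The field names match those read by the training script in this use case; the helper names (`encode_labels`, `one_hot`) and the exact label strings are assumptions made for illustration. Sorting the class names mirrors the ordering scikit-learn's LabelEncoder uses.

```python
# One illustrative record: four measurements plus the species label.
record = {
    "sepal_length": 5.1,
    "sepal_width": 3.5,
    "petal_length": 1.4,
    "petal_width": 0.2,
    "class": "Iris setosa",
}

FEATURES = ["sepal_length", "sepal_width", "petal_length", "petal_width"]
SPECIES = ["Iris setosa", "Iris versicolor", "Iris virginica"]


def encode_labels(labels):
    """Map class names to integers, assigning indices in sorted order."""
    classes = sorted(set(labels))
    index = {name: i for i, name in enumerate(classes)}
    return classes, [index[name] for name in labels]


def one_hot(label_ids, num_classes):
    """Turn integer labels into one-hot rows."""
    return [
        [1.0 if i == label else 0.0 for i in range(num_classes)]
        for label in label_ids
    ]


# Feature vector in the order the model expects.
feature_vector = [record[name] for name in FEATURES]

classes, label_ids = encode_labels(SPECIES)
targets_onehot = one_hot(label_ids, len(classes))
```

Each one-hot row has three entries, one per species, which is why a classifier for this dataset ends in a three-unit output layer.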

Objectives

- Model Building: Use the Remote Python Script Snap to train a neural network on the Iris flower dataset.

- Model Testing: Test the model with sample data.

- Model Hosting: Deploy the model as an API within SnapLogic using the Remote Python Script Snap and Ultra Task.

- API Testing: Use the REST Post Snap to send requests to the Ultra Task and validate API functionality.

Model building

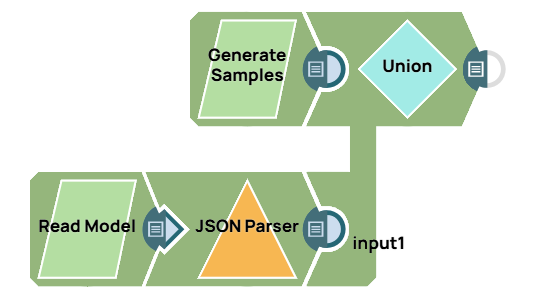

This pipeline uses the Remote Python Script Snap to train a neural network on 100 samples from the Iris dataset. The script outputs two items: a target encoder, which maps class names to numeric values, and the serialized model. The JSON Formatter and File Writer Snaps then save this output as a JSON file. The script defines three functions:

- snaplogic_init: Initializes the session and dependencies.

- snaplogic_process: Processes each record, extracting features and targets.

- snaplogic_final: Converts data to numpy arrays, trains the model, and serializes outputs.

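from snaplogic.tool import SLTool as slt

# Pin the library versions this script was written against.
slt.ensure("scikit-learn", "0.20.0")
slt.ensure("keras", "2.2.4")
slt.ensure("tensorflow", "1.5.0")

import base64, os, tempfile
import numpy, tensorflow, keras, sklearn.preprocessing

# Rows accumulated by snaplogic_process and consumed by snaplogic_final.
features, targets = [], []

def snaplogic_init():
    # Give Keras its own TensorFlow 1.x session.
    keras.backend.set_session(tensorflow.Session())

def snaplogic_process(row):
    # Collect the four measurements and the species label of each record.
    features.append([row["sepal_length"], row["sepal_width"], row["petal_length"], row["petal_width"]])
    targets.append(row["class"])

def snaplogic_final():
    features_arr = numpy.array(features)
    # Map class names to integers, then to one-hot vectors.
    target_encoder = sklearn.preprocessing.LabelEncoder().fit(targets)
    targets_encoded = target_encoder.transform(targets)
    targets_onehot = keras.utils.to_categorical(targets_encoded)
    # 4 inputs -> 16 hidden units (ReLU) -> 3 class probabilities (softmax).
    model = keras.models.Sequential([
        keras.layers.Dense(16, activation="relu", input_dim=4),
        keras.layers.Dense(3, activation="softmax")
    ])
    model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
    model.fit(features_arr, targets_onehot, epochs=50, batch_size=10, verbose=2)
    # model.to_json() captures only the architecture, so save the full model
    # (architecture and trained weights) to HDF5 and base64-encode the file.
    model_path = os.path.join(tempfile.mkdtemp(), "iris_model.h5")
    model.save(model_path)
    with open(model_path, "rb") as model_file:
        encoded_model = base64.b64encode(model_file.read()).decode("ascii")
    os.remove(model_path)
    target_encoder_json = slt.encode(target_encoder)
    return {"target_encoder": target_encoder_json, "model": encoded_model}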
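The three functions follow a lifecycle that can be tried locally without SnapLogic. The sketch below is a hypothetical harness, not part of the platform: it assumes the Snap calls `snaplogic_init` once before any input, `snaplogic_process` once per incoming record, and `snaplogic_final` once after the input is exhausted. The function bodies are simplified stand-ins that only collect rows, so the example runs without Keras or TensorFlow; `run_locally` and `events` are names introduced for illustration.

```python
features, targets = [], []
events = []  # records the call order so the lifecycle is visible


def snaplogic_init():
    events.append("init")


def snaplogic_process(row):
    features.append([row["sepal_length"], row["sepal_width"],
                     row["petal_length"], row["petal_width"]])
    targets.append(row["class"])
    events.append("process")


def snaplogic_final():
    # Stand-in for training: summarize what was collected.
    events.append("final")
    return {"rows_seen": len(features), "classes": sorted(set(targets))}


def run_locally(rows):
    """Hypothetical driver: init once, process each row, finalize once."""
    snaplogic_init()
    for row in rows:
        snaplogic_process(row)
    return snaplogic_final()


sample_rows = [
    {"sepal_length": 5.1, "sepal_width": 3.5, "petal_length": 1.4,
     "petal_width": 0.2, "class": "Iris setosa"},
    {"sepal_length": 6.3, "sepal_width": 3.3, "petal_length": 6.0,
     "petal_width": 2.5, "class": "Iris virginica"},
]

result = run_locally(sample_rows)
```

Because training happens only in `snaplogic_final`, the model sees the full set of collected rows at once rather than one record at a time.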

Model testing

This pipeline validates the model by testing it with three sample records. The CSV Generator Snap provides sample inputs, which are processed by the Remote Python Script Snap to predict classifications.
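A softmax output layer yields one probability per class, so turning a prediction into a readable result means picking the most probable class and reporting its probability as the confidence. The sketch below illustrates that step in plain Python; the probabilities are invented for the example, and the output keys `pred` and `conf` are assumed names rather than a documented format.

```python
CLASS_NAMES = ["Iris setosa", "Iris versicolor", "Iris virginica"]


def decode_prediction(probabilities, class_names):
    """Return the most probable class and its probability."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return {"pred": class_names[best], "conf": probabilities[best]}


# Illustrative softmax output for a single sample.
example = decode_prediction([0.88, 0.09, 0.03], CLASS_NAMES)
```

The order of `CLASS_NAMES` must match the order used by the target encoder at training time, otherwise the index picked here would map to the wrong species.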

Model hosting

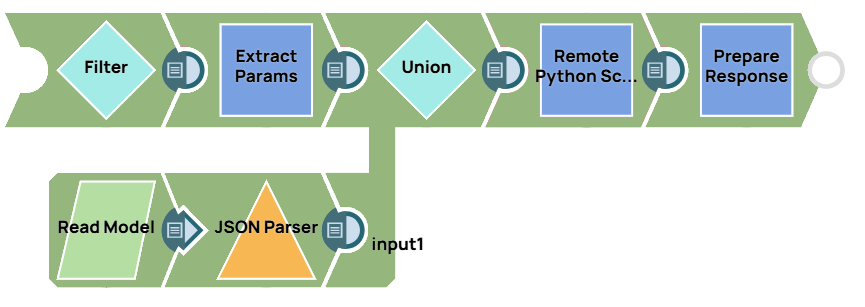

This pipeline runs as an Ultra Task to provide a REST API for classification predictions. The Remote Python Script Snap loads the model, processes API requests, and outputs predictions. The Filter Snap authenticates each request, and the Mapper Snap that prepares the response formats the output so that it supports cross-origin resource sharing (CORS).
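The flow through this pipeline can be sketched in plain Python as filter, predict, then map. This is an illustration under assumptions, not the pipeline's actual configuration: it assumes each request carries a shared `token` field, that rejected requests are simply dropped, and that CORS support means adding an `Access-Control-Allow-Origin` header. The token value, the response layout, and `fake_predict` are all hypothetical.

```python
import hmac

EXPECTED_TOKEN = "example-token"  # hypothetical shared secret
FEATURES = ["sepal_length", "sepal_width", "petal_length", "petal_width"]


def is_authorized(request):
    """Filter step: accept only requests carrying the expected token."""
    return hmac.compare_digest(str(request.get("token", "")), EXPECTED_TOKEN)


def build_response(prediction):
    """Mapper step: wrap the prediction and add a CORS header."""
    return {
        "content": prediction,
        "headers": {"Access-Control-Allow-Origin": "*"},
    }


def handle_request(request, predict):
    if not is_authorized(request):
        return None  # dropped by the filter
    measurements = [float(request[name]) for name in FEATURES]
    return build_response(predict(measurements))


def fake_predict(measurements):
    # Stand-in for the trained model; always returns the same answer.
    return {"pred": "Iris setosa", "conf": 0.88}


accepted = handle_request(
    {"token": "example-token", "sepal_length": 5.1, "sepal_width": 3.5,
     "petal_length": 1.4, "petal_width": 0.2},
    fake_predict,
)
rejected = handle_request({"token": "wrong"}, fake_predict)
```

Checking the token before extracting the measurements means unauthenticated requests never reach the model.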

Building API

To deploy this pipeline as a REST API, click the calendar icon in the toolbar and select either Triggered Task or Ultra Task:

- Triggered Task: Suitable for batch processing.

- Ultra Task: Recommended for real-time API access due to low latency.

To find the API URL, select Show tasks in this project in Manager in the Create Task window. This opens the Manager page, where you can choose Details for the task to see its URL.

API testing

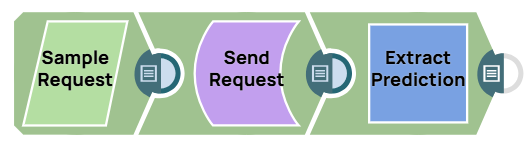

In this pipeline, the JSON Generator Snap generates a sample request, the REST Post Snap sends it to the Ultra Task, and the Mapper Snap extracts the response.

The final output shows the predicted flower type and confidence level. For example, a request may return Iris setosa with a confidence level of 0.88.
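Outside SnapLogic, a comparable request can be prepared with the Python standard library. The sketch below only builds the POST request; the URL, the token, and the payload field names are placeholders that would need to match the actual Ultra Task and the fields the hosting pipeline expects. `build_request` and `send` are names introduced for this example.

```python
import json
import urllib.request

# Placeholder values: replace with the real task URL and token.
TASK_URL = "https://example.com/ultra/iris"
TOKEN = "example-token"


def build_request(url, token, measurements):
    """Build a JSON POST request carrying the token and measurements."""
    payload = dict(measurements, token=token)
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request and decode the JSON response (not called here)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_request(
    TASK_URL,
    TOKEN,
    {"sepal_length": 5.1, "sepal_width": 3.5,
     "petal_length": 1.4, "petal_width": 0.2},
)
```

Calling `send(request)` against a live task would return the parsed response, from which the predicted species and confidence can be read.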