Detect and reconcile changes

In the context of data integration and orchestration, the term schema drift describes a period when the state of the source differs from that of the target. After an initial load, during synchronization, SnapLogic AutoSync detects changes to the source schema. When possible, AutoSync propagates those changes to the destination.

The types of schema drift that AutoSync can reconcile include:

- Column name changes.

- Column additions and deletions.

- For all destinations, some data type changes.

- Column nullability changes from NOT NULL to NULLABLE: AutoSync detects this change in the source schema, and, if supported, updates the schema of the target endpoint to allow the NULL values.

- For Salesforce sources, changes to the column precision, scale, or character limit when the new value is larger than the old one.

- For Salesforce sources, the option to track deleted records.

How AutoSync reconciles schema drift depends on the load type, the type of change, and the functionality supported by the endpoints. The following sections provide more details.

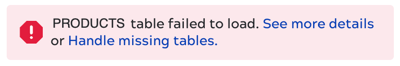

Source table deletions

When a table exists in the target, but has been removed from the source, it causes a failure on the next run of the data pipeline. The AutoSync error message provides a Handle missing tables link. Click it to open a dialog from which you can remove the deleted table from the data pipeline. The next run should be successful.

Source record deletions

With a Full load, AutoSync deletes data from the target and refreshes it from the source. Records deleted in the source since the last synchronization no longer exist in the target after a Full load.

- Periodically perform a Full load.

- Implement a workaround by adding a column to track deletes. For example, create an

isDeletedcolumn and mark as true instead of deleting the record. During Incremental or SCD2 loads, AutoSync detects the change and updates theisDeletedcolumn value. To completely delete the records, you can run a script periodically to remove them from both the source and the target.

isDeleted field. AutoSync gives you the

option to synchronize the values in

this field to the target.Column additions and deletions

After the initial load, AutoSync detects additions and deletions of columns in the source tables:

- For an added column, AutoSync adds it to the target.

- For a deleted column, AutoSync retains the column and its values in the target. If a record is later added to the source table, AutoSync adds the row, and inserts a null value in the deleted column.

Addition and deletion examples

AutoSync detects additions and deletions made to the source since the last load. You or a colleague can also change target data, but AutoSync never updates the source, synchronization is one way. Be cautious with manual changes to the target, because it can have unexpected results. The following scenarios illustrate some of these.

Scenario 1: No change in source, table, or records deleted in target, full load. A user manually removes a table from the target but doesn't remove the corresponding source object or table. The next time the data pipeline runs, AutoSync adds the table back to the target because it's still in the source. The same occurs if a user deletes the records from a table in the target but doesn't delete those records from the source. The next time the data pipeline runs, AutoSync adds the records back because they still exist in the source.

Scenario 2: No change in source, records deleted in target, incremental load. A user deletes some records from a table in the target and doesn't make any changes to the source. If the table in the target still has at least one record, on the next run AutoSync does nothing because there was no change in the source. The deleted records aren't added back to the target.

Scenario 3: Changes to source and target, incremental load. A user adds a record to a table in the source and then deletes a record from the corresponding table in the target. The table in the target still has at least one record. The next time the data pipeline runs, the record added to the source also appears in the target, but the record deleted from the target isn't added back.

Changes to data types

For all destinations, AutoSync can propagate some data type changes from the source. AutoSync does not propagate changes that would result in data loss, such as changing an integer 100 to an integer 95. If the change isn't supported, AutoSync logs a message and skips column updates.

Since destinations don't always support all source data types, AutoSync converts data to a compatible type. To do this, it first converts data types to a common model, then from the common model to the destination data type. A subset of the results of source to target mappings are documented in How AutoSync maps data. Likewise, when detecting a change in a source data type, AutoSync propagates the change using a compatible type in the destination. AutoSync uses the corresponding data type that requires the least amount of storage.

For some data types, the only compatible type is a string. For example, if a date

in the source is changed to time AutoSync changes the destination type to

string. The values of a date can't be converted to time values. In this example,

the string type is compatible with the original and the changed types and takes up

the least space.

The following table shows supported type changes for generic data types:

| Source original type | Source changed type | Notes |

|---|---|---|

| Any type | string |

|

int |

int |

The change must be to a larger size. For example, int(8) to int(32), not int(32) to int(8). |

int |

decimal |

|

float(4) |

float(8) |

|

char |

string |

|

date |

datetime |

|

date |

timestamp |

|

datetime |

timestamp |

|

float |

timestamp |

|

time |

string |

|

timestamp |

string |

|

binary |

string |

Using these generic types, you can extrapolate most results in the destination by consulting the documented endpoint-specific mappings.

However, if the changed type is not convertible to the documented type, AutoSync converts to a string, which is compatible with the original and the changed data type.

For example, the following table shows how AutoSync propagates changes to data types in SQL Server to Snowflake.

Note that FLOAT and DECIMAL in SQL Server must be converted to a string and become a VARCHAR type in the destination.

| Source original | Source changed | Destination original | Destination changed |

|---|---|---|---|

SMALLINT |

INT |

NUMBER(38, 0) |

NUMBER(38, 0) |

VARCHAR(50) |

NVARCHAR(100) |

VARCHAR(50) |

VARCHAR(100) |

DATE |

DATETIME |

DATE |

TIMESTAMP_NTZ |

BIGINT |

FLOAT |

NUMBER(38, 0) |

VARCHAR(100) |

FLOAT |

DECIMAL(8, 4) |

FLOAT |

VARCHAR(MAX) |

REAL |

NUMERIC(10, 5) |

FLOAT |

VARCHAR(MAX) |

TEXT |

NVARCHAR(MAX) |

VARCHAR(100) |

VARCHAR(MAX) |

TIME(3) |

TIME |

TIME |

TIME |

Salesforce option to include deleted records

Salesforce objects have an isDeleted field that indicates whether a record has been moved to the recycle bin for deletion.

Eventually, Salesforce empties the recycle bin and permanently deletes the records.

You might want to have records that have been marked for deletion loaded to the target to keep a history of deletions.

To add records marked for deletion to the target, AutoSync configuration gives you the option to Include deleted records:

- If unchecked, AutoSync only loads records with a value of

Falsein theisDeletedfield. - If checked, AutoSync loads all records, including those with a value of

Truein theisDeletedfield.

The following table summarizes the behavior of the Include deleted records option:

| State of the Include deleted records option | First full load | After synchronization |

|---|---|---|

| Unchecked | No deleted records loaded to target | No deleted records loaded to target |

| Checked | Deleted records loaded to target | Records deleted since last load loaded to target |

| Checked and run at least once, then unchecked | Deleted records loaded to target | And after unchecking the option, records deleted since last load are not loaded to target |

| Unchecked and run at least once, then checked | No deleted records loaded to target | And after checking the option, records deleted since last load are loaded to target |

Learn more about Salesforce connection configuration.

Changes to column scale, precision, or character limit in Salesforce

The following Salesforce usage is prerequisite to understanding AutoSync reconciliation:

- Precision is the total digits allowed in a numeric field.

- Scale is the number of digits to the right of the decimal point in a numeric field.

- Text fields have a character limit and Salesforce uses 3 bytes to store each character. The corresponding character limit in the target will be 3 times the Salesforce limit.

Data pipelines with Salesforce as a source and Amazon Redshift, Snowflake, or Google BigQuery as a target can reconcile increases to scale or precision for numeric fields and increases to character limits for text fields. AutoSync cannot reconcile reductions in scale, precision, or character length because it cannot transform existing data in the target to have fewer digits, fewer decimal places, or characters.

If you increase the scale, precision, or character limit of a field in Salesforce, AutoSync will modify the corresponding target column. Existing data in the target remains the same. AutoSync synchronizes new or changed data using the updated constraints.

The following examples show how AutoSync modifies the target after changes to the Salesforce constraints on text and numeric fields:

| Original field | Modified in source | AutoSync modifies target |

|---|---|---|

string 20 character limit |

string 50 character limit |

The target type that corresponds to string with a 150 character limit.

For example, in Snowflake VARCHAR(150)

|

integer 10 digit limit, no decimal |

decimal 14 digit limit, 2 decimal places |

The target type that corresponds to a numeric type with a 14 digit limit, 2 decimal places. For example, in Snowflake, number(14,2) |