Use Case: Object recognition with Inception-v3

Overview

This use case demonstrates object recognition in images using a deep learning model, Inception-v3, deployed within a SnapLogic pipeline.

Problem scenario

While recognizing objects in images is simple for humans, teaching machines this task is complex. Recent advancements in Deep Convolutional Neural Networks have significantly improved the accuracy of machine-based image recognition.

Description

Inception-v3 is a Deep Convolutional Neural Network model that recognizes objects in images with high accuracy on ImageNet (78.8% top-1 and 94.4% top-5 accuracy). The Keras library provides a pretrained Inception-v3 model that can be integrated into SnapLogic pipelines for object recognition tasks.
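Top-1 accuracy counts a prediction as correct only when the true class has the highest score; top-5 accuracy counts it as correct when the true class is among the five highest-scoring classes. A minimal plain-Python sketch of the general top-k metric follows; `top_k_accuracy` is a hypothetical helper, and the three-sample, four-class scores are illustrative toy numbers, not Inception-v3 output.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not scores:
        raise ValueError("need at least one sample")
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the top k.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(labels)


# Toy example (illustrative numbers only): 3 samples, 4 classes.
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true label 1 is ranked 1st
    [0.5, 0.1, 0.3, 0.1],  # true label 2 is ranked 2nd
    [0.4, 0.3, 0.2, 0.1],  # true label 3 is ranked 4th
]
labels = [1, 2, 3]
print(top_k_accuracy(scores, labels, k=1))  # 1 of 3 correct
print(top_k_accuracy(scores, labels, k=2))  # 2 of 3 correct
```

For Inception-v3 the same metric is applied with k=1 and k=5 over 1,000 ImageNet classes.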

Objectives

- Model Hosting: Use the Remote Python Script Snap from the ML Core Snap Pack to host the Inception-v3 model, and schedule the pipeline as an Ultra Task to expose it as an API.

- API Testing: Use the REST Post Snap to send sample requests to the Ultra Task and verify that the API works.

Model hosting

This pipeline is scheduled as an Ultra Task to provide a REST API accessible by external applications. The Remote Python Script Snap downloads and hosts the Inception-v3 model, using it to recognize objects in incoming images.

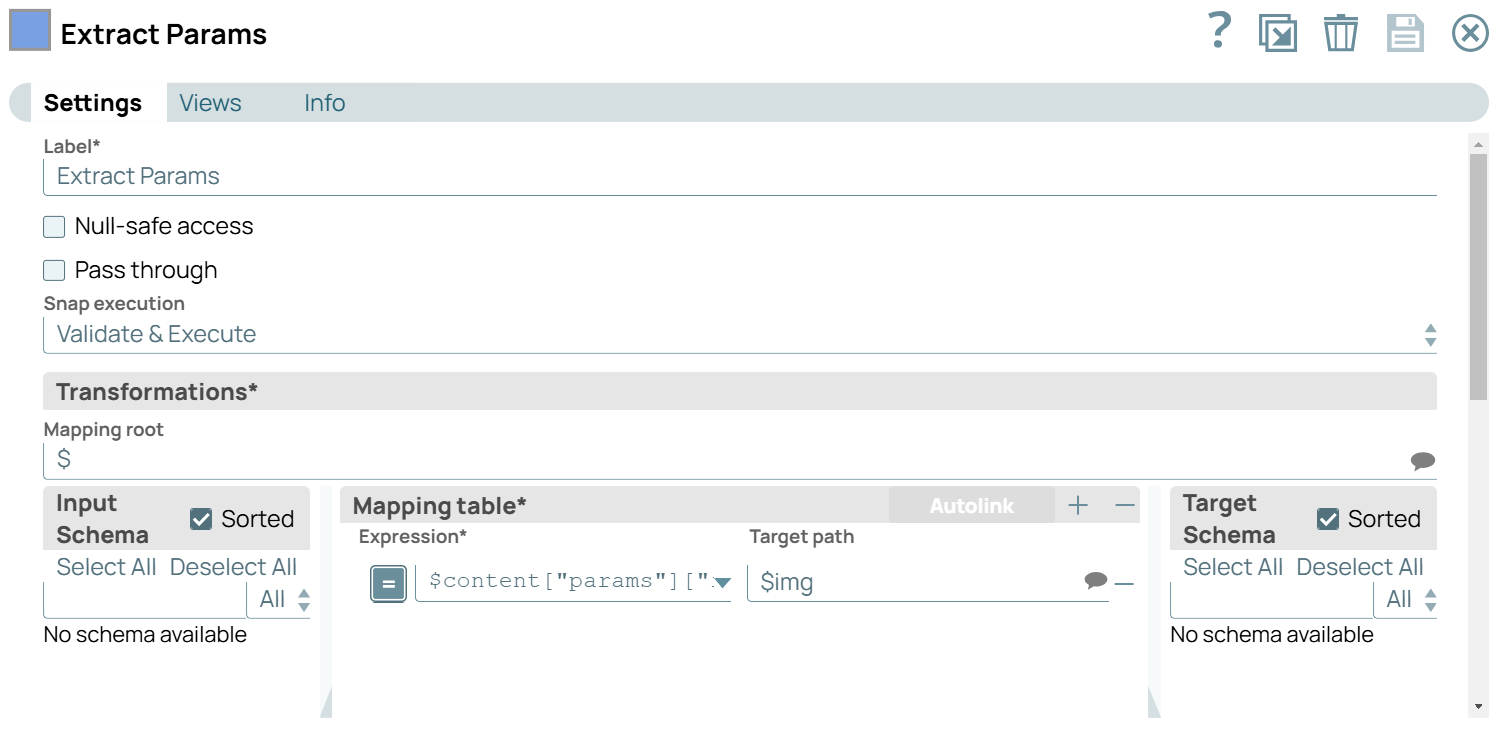

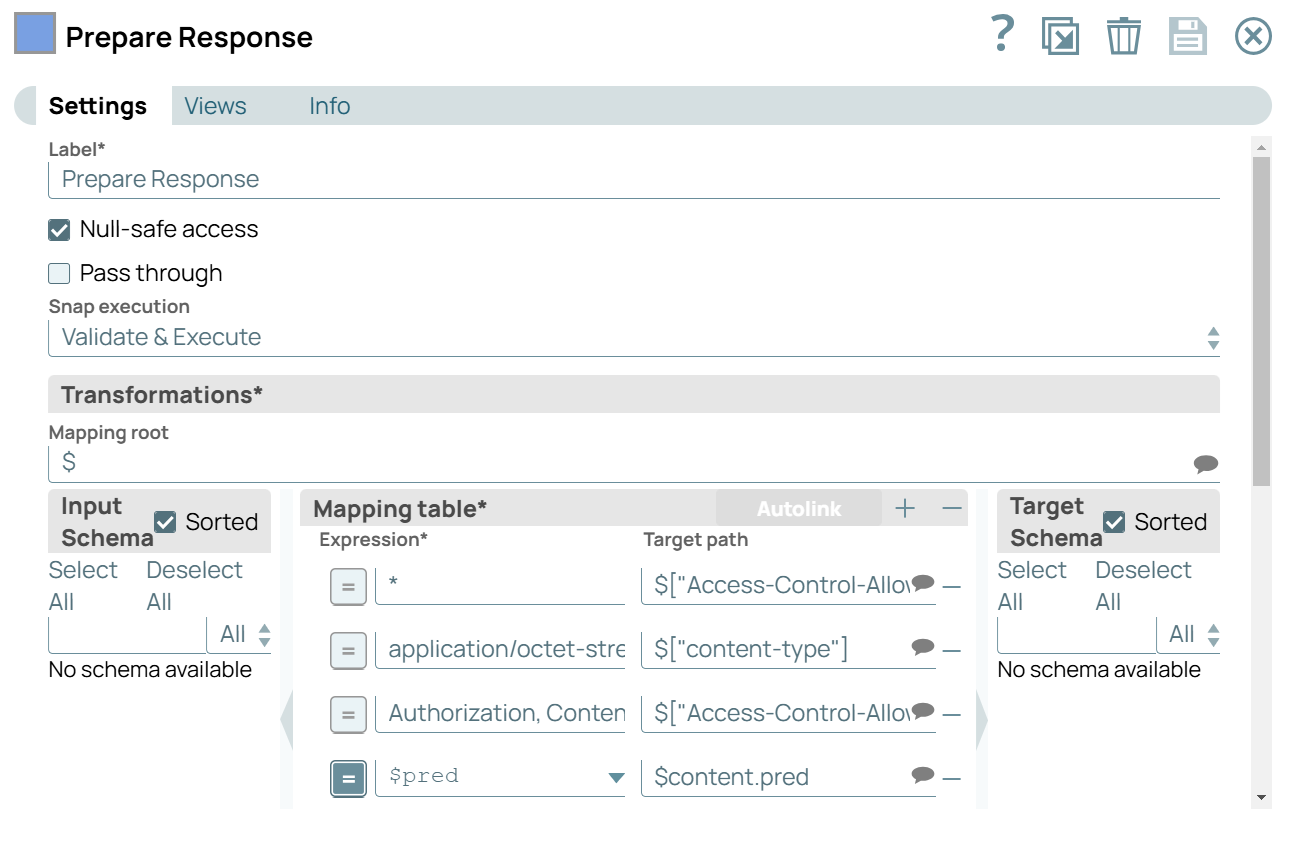

Figure: Extract Params (Mapper) Snap (left) and Prepare Response (Mapper) Snap (right).

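The script in the Remote Python Script Snap defines three functions:

- snaplogic_init: Runs once before any input is consumed; loads the Inception-v3 model.

- snaplogic_process: Runs for each incoming document; decodes the base64-encoded image and applies the model to return the top-5 predictions.

- snaplogic_final: Runs once after all input has been processed.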
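The calling order described in the list above can be sketched with a hypothetical local driver, which is handy for exercising a script outside SnapLogic. `run_locally` and the stub functions below are illustrative assumptions, not part of the SnapLogic API, and the sketch does not model how the Snap actually streams documents.

```python
from typing import Any, Callable, Dict, Iterable, List, Optional


def run_locally(
    init: Callable[[], Optional[Any]],
    process: Callable[[Dict[str, Any]], Dict[str, Any]],
    final: Callable[[], Optional[Any]],
    rows: Iterable[Dict[str, Any]],
) -> List[Dict[str, Any]]:
    """Hypothetical driver: call init once, process once per row, then final once."""
    init()
    outputs = [process(row) for row in rows]
    final()
    return outputs


# Stub script functions standing in for the real ones (no model is loaded here).
calls: List[str] = []


def snaplogic_init():
    calls.append("init")
    return None


def snaplogic_process(row):
    calls.append("process")
    return {"echo": row["img"]}


def snaplogic_final():
    calls.append("final")
    return None


results = run_locally(snaplogic_init, snaplogic_process, snaplogic_final,
                      [{"img": "a"}, {"img": "b"}])
print(calls)    # ['init', 'process', 'process', 'final']
print(results)  # [{'echo': 'a'}, {'echo': 'b'}]
```

Loading the model in the init step rather than per document is what keeps per-request latency low once the pipeline is running.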

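```python
from snaplogic.tool import SLTool as slt

# Make sure the required library versions are installed before importing them.
slt.ensure("keras", "2.2.4")
slt.ensure("tensorflow", "1.5.0")

import base64
import re
from io import BytesIO

import numpy
from PIL import Image
from keras.applications.inception_v3 import InceptionV3, preprocess_input, decode_predictions

# Global model: loaded once in snaplogic_init and reused for every document.
model = None


def snaplogic_init():
    """Load Inception-v3 with ImageNet weights (Keras downloads them on first use)."""
    global model
    model = InceptionV3(weights="imagenet")
    return None


def snaplogic_process(row):
    """Decode the base64 image in row["img"] and return the top-5 predictions."""
    try:
        image_uri = row["img"]
        # Strip the optional "data:image/...;base64," header before decoding.
        image_base64 = re.sub("^data:image/.+;base64,", "", image_uri)
        image_data = base64.b64decode(image_base64)
        # Inception-v3 with its classification head expects 299x299 RGB input.
        image = Image.open(BytesIO(image_data)).convert("RGB").resize((299, 299))
        # Add a batch dimension: shape (1, 299, 299, 3).
        image = numpy.expand_dims(numpy.array(image, dtype=numpy.float32), axis=0)
        # Scale pixel values to [-1, 1], as Inception-v3 expects.
        image = preprocess_input(image)
        pred = model.predict(image)
        # decode_predictions yields (class_id, label, score) tuples; cast the
        # NumPy score to a plain float so the result serializes cleanly.
        top5 = decode_predictions(pred, top=5)[0]
        return {"pred": [(wnid, label, float(score)) for wnid, label, score in top5]}
    except Exception:
        return {"pred": "Invalid request."}


def snaplogic_final():
    return None
```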
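Because snaplogic_process turns every failure into "Invalid request.", it can help to test the decoding step on its own before involving the model. A minimal standard-library sketch follows; `decode_data_uri` is a hypothetical helper, and passing `validate=True` to `base64.b64decode` is a stricter choice than the script's plain call, so malformed input raises instead of being silently cleaned up.

```python
import base64
import re

# Same pattern the script uses to strip the data-URI header.
DATA_URI_PREFIX = re.compile(r"^data:image/.+;base64,")


def decode_data_uri(image_uri: str) -> bytes:
    """Strip an optional 'data:image/...;base64,' prefix and decode the payload."""
    payload = DATA_URI_PREFIX.sub("", image_uri)
    # validate=True raises binascii.Error (a ValueError subclass) on characters
    # outside the base64 alphabet instead of discarding them.
    return base64.b64decode(payload, validate=True)


raw = b"\x89PNG\r\n\x1a\n"  # the 8-byte PNG file signature
uri = "data:image/png;base64," + base64.b64encode(raw).decode("ascii")
print(decode_data_uri(uri) == raw)  # True
```

A bare base64 string without the header also decodes, because the prefix pattern is simply not matched.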

Building the API

To deploy this pipeline as a REST API, click the calendar icon in the toolbar and select either Triggered Task or Ultra Task.

- Triggered Task: Best for batch processing; each request starts a new pipeline instance.

- Ultra Task: Best for low-latency REST API access, because the pipeline stays running between requests and the model is loaded only once. This is the preferred option for this use case.

No Bearer token is required, because the pipeline handles authentication itself using the token sent in the request body. To find the API URL, click Show tasks in this project in Manager in the Create Task window, then select Details from the task's options.

API testing

In this pipeline, the JSON Generator Snap creates a sample request, the REST Post Snap sends it to the Ultra Task, and a Mapper Snap extracts the response body from $response.entity.

The JSON Generator Snap defines $token and $params, which are sent in the request body. The Ultra Task URL is set as a pipeline parameter; you can find it on the Manager page. If the endpoint's SSL certificate cannot be verified, enable Trust all certificates in the REST Post Snap.
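An external application can call the Ultra Task in the same way. The following standard-library sketch builds, but does not send, such a POST request. The body shape `{"token": ..., "params": {"img": <data URI>}}` is an assumption inferred from the $token and $params fields and the script's row["img"] lookup; check it against your Extract Params (Mapper) Snap. The helper name `build_request`, the URL, and the token are placeholders.

```python
import base64
import json
import urllib.request


def build_request(url: str, token: str, image_bytes: bytes,
                  mime: str = "image/jpeg") -> urllib.request.Request:
    """Build (but do not send) a POST request with an ASSUMED token/params body."""
    data_uri = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    body = {"token": token, "params": {"img": data_uri}}  # assumed body shape
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL and token; copy the real URL from the task's Details in Manager.
req = build_request("https://example.com/ultra-task", "my-token", b"\xff\xd8\xff")
print(req.get_method())  # POST
# Sending it: urllib.request.urlopen(req) performs the call and returns the response.
```

Keeping request construction separate from sending makes the body easy to inspect before pointing it at a live task.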

The REST Post Snap output contains the model's predictions. The final Mapper Snap extracts $response.entity, which shows that the object in the image is most likely a remote control, with a confidence score of 0.986.
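On the client side, the top prediction can be picked out of the response entity. This sketch assumes the entity looks like {"pred": [[class_id, label, score], ...]}, that is, the script's (class_id, label, score) tuples arriving as JSON arrays, and that a string value signals a rejected request. `top_prediction` is a hypothetical helper; in the sample entity, the class IDs and the second entry are placeholders, and only the remote-control score comes from the run described above.

```python
from typing import Any, Tuple


def top_prediction(entity: Any) -> Tuple[str, float]:
    """Return (label, score) of the highest-scoring entry in entity["pred"].

    Assumes entity["pred"] is a list of [class_id, label, score] triples,
    or the string "Invalid request." when the service rejected the input.
    """
    preds = entity["pred"]
    if isinstance(preds, str):
        raise ValueError(preds)
    _class_id, label, score = max(preds, key=lambda p: p[2])
    return label, float(score)


# Illustrative entity: placeholder class IDs and a placeholder second entry.
entity = {"pred": [["id-1", "remote_control", 0.986], ["id-2", "another_label", 0.005]]}
print(top_prediction(entity))  # ('remote_control', 0.986)
```

Taking the maximum rather than the first element avoids relying on the list order, although decode_predictions already returns entries sorted by score.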