OpenAI Chat Completions

Overview

You can use this Snap to generate chat completions using the specified model and model parameters.

Transform-type Snap

Works in Ultra Tasks

Prerequisites

None.

Limitations

- The gpt-4 and gpt-4-0613 models do not support JSON Mode. gpt-4-1106-preview and any model released after it support JSON Mode. Learn more.

Known issues

None.

Snap views

| View | Description | Examples of upstream and downstream Snaps |

|---|---|---|

| Input | This Snap supports a maximum of one binary or document input view. When the input type is a document, you must provide a field to specify the path to the input prompt. The Snap requires a prompt, which you can either generate with the OpenAI Prompt Generator Snap or write yourself for submission to the chat completions LLM API. | Mapper |

| Output | This Snap has at most one document output view. The Snap provides the result generated by the OpenAI LLMs. | Mapper |

| Error | Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter when running the pipeline by choosing one of the options from the When errors occur list under the Views tab. Learn more about Error handling in Pipelines. | |

Snap settings

- Expression icon: Allows using JavaScript syntax to access SnapLogic Expressions to set field values dynamically (if enabled). If disabled, you can provide a static value. Learn more.

- SnapGPT: Generates SnapLogic Expressions based on natural language using SnapGPT. Learn more.

- Suggestion icon: Populates a list of values dynamically based on your Snap configuration. You can select only one attribute at a time using the icon. Type into the field if it supports a comma-separated list of values.

- Upload: Uploads files. Learn more.

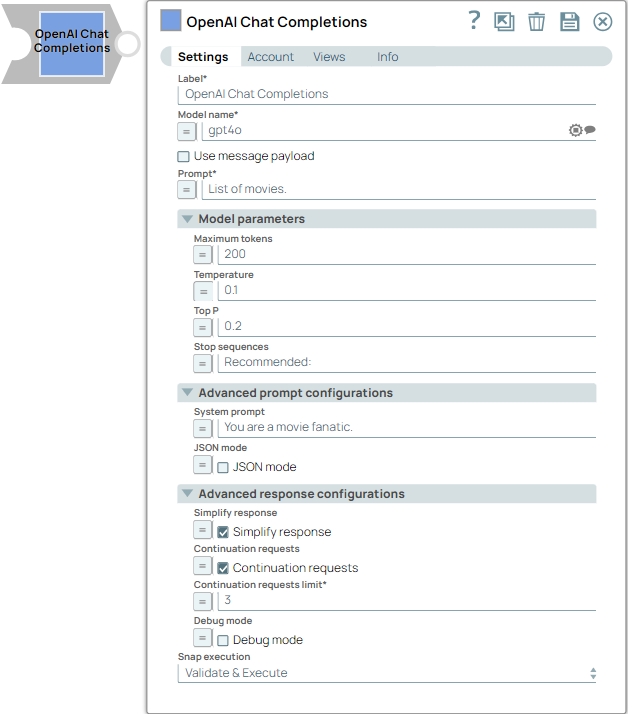

| Field / Field set | Type | Description |

|---|---|---|

| Label | String | Required. Specify a unique name for the Snap. Use a more descriptive name, especially if the pipeline contains more than one instance of this Snap. Default value: OpenAI Chat Completions. Example: Create customer support chatbots |

| Model name | String/Expression | Required. Specify the model name to use for the chat completion. Learn more about the list of models from OpenAI that are compatible with the completions API. Default value: N/A. Example: gpt-4 |

| Use message payload | Checkbox | Select this checkbox to generate responses using the messages specified in the Message payload field. Default status: Deselected |

| Message payload | String/Expression | Appears when you select the Use message payload checkbox. Required. Specify the prompt to send to the chat completions endpoint as the user message. The expected data type for this field is a list of objects (a list of messages). You can generate this list with the OpenAI Prompt Generator Snap. Default value: N/A. Example: $messages |

| Prompt | String/Expression | Appears when you select Document as the Input type. Required. Specify the prompt to send to the chat completions endpoint as the user message. Default value: N/A. Example: $msg |

| Output Handling | Checkbox/Expression | Select to store the output. Default value: Deselected. |

| Store | Checkbox/Expression | Select to persist the output. Default value: N/A |

| Model parameters | Configure the parameters to tune the model runtime. | |

| Reasoning effort | Dropdown list/Expression | Select the level of constraint for a reasoning model. Enabled if the model is a reasoning model or an expression. Default value: medium. Note: Higher-level reasoning is generally used for multi-part processing, such as advanced math problem solving, autonomous decision making, and complex planning. A low reasoning effort, on the other hand, might result in faster responses and fewer tokens used in a response. |

| Maximum tokens | Integer/Expression | Specify the maximum number of tokens to be used to generate the chat completions result, including tokens used for reasoning. If left blank, the default value of the endpoint is used. Default value: N/A. Example: 50 |

| Temperature | Decimal/Expression | Specify the sampling temperature to use, a decimal value between 0 and 1. If left blank, the default value of the endpoint is used. Default value: N/A. Example: 0.2 |

| Top P | Decimal/Expression | Specify the nucleus sampling value, a decimal value between 0 and 1. If left blank, the default value of the endpoint is used. Default value: N/A. Example: 0.2 |

| Response count | Integer/Expression | Specify the number of responses to generate for each input, where 'n' is a model parameter. If left blank, the default value of the endpoint is used. Default value: N/A. Example: 2 |

| Stop sequences | String/Expression | Specify a sequence of texts or tokens to stop the model from generating further output. Learn more. Default value: N/A. Example: pay, ["amazing"], ["September", "paycheck"] |
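To make the Message payload and Model parameters fields more concrete, the following Python sketch shows a list of message objects and a request body assembled from the settings above. It is illustrative only: it assumes the standard OpenAI Chat Completions field names (`messages`, `max_tokens`, `temperature`, `top_p`, `n`, `stop`), and `build_request` is a hypothetical helper, not part of the Snap. Settings left blank are omitted so that the endpoint default applies.

```python
from typing import Any, Optional, Union

# A message payload: a list of objects, each with a role and content.
messages = [
    {"role": "system", "content": "You are a concise support assistant."},
    {"role": "user", "content": "How do I reset my password?"},
]


def build_request(
    model: str,
    messages: list[dict[str, str]],
    max_tokens: Optional[int] = None,
    temperature: Optional[float] = None,
    top_p: Optional[float] = None,
    n: Optional[int] = None,
    stop: Union[str, list[str], None] = None,
) -> dict[str, Any]:
    """Hypothetical helper: assemble a request body, skipping blank settings."""
    for name, value in (("temperature", temperature), ("top_p", top_p)):
        # The field descriptions above give a range of 0 to 1 for both values.
        if value is not None and not 0 <= value <= 1:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    optional = {
        "max_tokens": max_tokens,
        "temperature": temperature,
        "top_p": top_p,
        "n": n,
        "stop": stop,
    }
    body: dict[str, Any] = {"model": model, "messages": messages}
    # Leaving a setting blank (None) lets the endpoint default apply.
    body.update({k: v for k, v in optional.items() if v is not None})
    return body


request = build_request("gpt-4", messages, max_tokens=50, temperature=0.2,
                        stop=["September", "paycheck"])
print(request)
```

Because `top_p` and `n` are not passed in this call, they do not appear in the resulting body at all.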

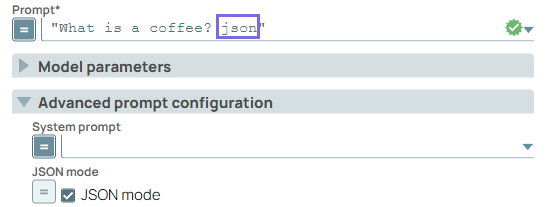

| Advanced prompt configuration | Configure the prompt settings to guide the model responses and optimize output processing. | |

| System prompt | String/Expression | Specify the persona for the model to adopt in the responses. This initial instruction guides the LLM's responses and actions. Default value: N/A |

| JSON mode | Checkbox/Expression | Select this checkbox to enable the model to generate strings that can be parsed into valid JSON objects. The output includes the parsed JSON object in a field named json_output that contains the data. Default status: Deselected |

| Advanced response configurations | Configure the response settings to customize the responses and optimize output processing. | |

| Structured outputs | String/Expression | Enter the schema or expression that ensures the model always returns outputs that match your defined JSON Schema. Default value: N/A. Example: $response_format.json_schema. Important: Only specific models support structured outputs for JSON mode. |

| Simplify response | Checkbox/Expression | Select this checkbox to receive a simplified response format that retains only the most commonly used fields and standardizes the output for compatibility with other models. This option supports only a single choice response. Note: This field does not support upstream values. Default status: Deselected |

| Continuation requests | Checkbox/Expression | Select this checkbox to enable continuation requests. When selected, the Snap automatically requests additional responses if the finish reason is Maximum tokens. Important: This Snap uses the same schema as the OpenAI response. However, when multiple responses are merged through Continuation requests, certain fields, such as id and system_fingerprint, may not merge correctly because they are not designed to be combined across multiple entries. Note: This field does not support upstream values. Default status: Deselected |

| Continuation requests limit | Integer/Expression | Appears when you select the Continuation requests checkbox. Required. Specify the maximum number of continuation requests to be made. Note: This field does not support upstream values. Minimum value: 1. Maximum value: 20. Default value: N/A. Example: 3 |

| Debug mode | Checkbox/Expression | Appears when you select the Simplify response or Continuation requests checkbox. Select this checkbox to enable debug mode. This mode provides the raw response in the _sl_response field and is recommended for debugging purposes only. If Continuation requests is enabled, the _sl_responses field contains an array of raw response objects from each individual request. Note: This field does not support upstream values. Default status: Deselected |

| Snap execution | Dropdown list | Choose one of the three modes in which the Snap executes. Default value: Validate & Execute. Example: Execute only |
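As a concrete illustration of the JSON mode and Structured outputs settings, the Python sketch below builds a schema wrapper in the `response_format` shape that OpenAI documents for structured outputs, then checks a reply string against the schema's required keys. The schema name `support_ticket`, its fields, the sample reply, and the `parse_reply` helper are all invented for illustration; the shape of the upstream document in your pipeline may differ.

```python
import json

# Hypothetical schema: the field names are illustrative, not Snap defaults.
response_format = {
    "type": "json_schema",
    "json_schema": {
        "name": "support_ticket",
        "strict": True,
        "schema": {
            "type": "object",
            "properties": {
                "category": {"type": "string"},
                "priority": {"type": "integer"},
            },
            "required": ["category", "priority"],
            "additionalProperties": False,
        },
    },
}


def parse_reply(raw: str, schema: dict) -> dict:
    """Parse a JSON string and check that the required keys are present."""
    parsed = json.loads(raw)
    missing = [key for key in schema["required"] if key not in parsed]
    if missing:
        raise ValueError(f"reply is missing required keys: {missing}")
    return parsed


# A sample reply string, standing in for the text the model returns.
sample_reply = '{"category": "billing", "priority": 2}'
json_output = parse_reply(sample_reply, response_format["json_schema"]["schema"])
print(json_output)
```

If an upstream document carried a `response_format` object like this one, the example expression `$response_format.json_schema` would select its inner `json_schema` value.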

Troubleshooting

Failure: Request encountered an error while connecting to OpenAI, status code 400

The input model does not support JSON Mode. The gpt-4, gpt-4-0613, and gpt-4-0314 models do not support JSON Mode.

Provide a valid model to proceed. gpt-4-1106-preview and any model released after it support JSON Mode. Learn more.

Snap's model parameters for 'Response count' is ignored since advanced response configurations 'Simplify response' or 'Continuation requests' is enabled

The Response count value is ignored because Simplify response or Continuation requests only supports a single response.

Set the Response count value to 1 to resolve.
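The first two troubleshooting entries can be expressed as a small pre-flight check. The Python sketch below is a hypothetical helper, not something the Snap exposes: the `preflight` function and its parameter names are assumptions, and the set of models comes from the list given above.

```python
# Models that the first troubleshooting entry lists as lacking JSON Mode support.
NO_JSON_MODE = {"gpt-4", "gpt-4-0613", "gpt-4-0314"}


def preflight(model, json_mode=False, response_count=None,
              simplify_response=False, continuation_requests=False):
    """Hypothetical check: return a list of problems found in the settings."""
    problems = []
    if json_mode and model in NO_JSON_MODE:
        problems.append(f"{model} does not support JSON Mode")
    # Simplify response and Continuation requests support a single response only.
    single_only = simplify_response or continuation_requests
    if single_only and response_count not in (None, 1):
        problems.append("Response count is ignored; set it to 1")
    return problems


print(preflight("gpt-4", json_mode=True))
print(preflight("gpt-4o", response_count=2, simplify_response=True))
```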

Continuation requests limit error.

The Continuation requests limit value is invalid.

Provide a valid value for Continuation requests limit between 1 and 20.
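How Continuation requests and its limit interact can be sketched in Python as follows. This illustrates the general technique only and is not the Snap's actual implementation: `fake_complete` is a stand-in for a chat completions call, the response dictionaries are simplified, and treating a finish reason of `length` as "stopped at the maximum token count" follows the OpenAI API convention.

```python
from typing import Callable

MIN_LIMIT, MAX_LIMIT = 1, 20  # range documented for Continuation requests limit


def complete_with_continuation(
    complete: Callable[[str], dict], prompt: str, limit: int
) -> dict:
    """Request more output while the reply is cut off, up to `limit` extra calls."""
    if not MIN_LIMIT <= limit <= MAX_LIMIT:
        raise ValueError("Continuation requests limit must be between 1 and 20")
    response = complete(prompt)
    text = response["content"]
    requests_made = 0
    # "length" is assumed to mean the reply stopped at the maximum token count.
    while response["finish_reason"] == "length" and requests_made < limit:
        response = complete(prompt + text)  # ask the model to carry on
        text += response["content"]
        requests_made += 1
    return {"content": text, "finish_reason": response["finish_reason"],
            "continuations": requests_made}


def fake_complete(prompt: str) -> dict:
    """Stand-in model: emits the target text four characters at a time."""
    target = "Hello, world!"
    done = prompt[len("Say hi: "):]  # text produced so far
    chunk = target[len(done):len(done) + 4]
    finished = len(done) + len(chunk) >= len(target)
    return {"content": chunk, "finish_reason": "stop" if finished else "length"}


result = complete_with_continuation(fake_complete, "Say hi: ", limit=5)
print(result)
```

With a limit that is too low for the text to finish, the merged result still ends with a `length` finish reason, which is one way to tell that the output remains truncated.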