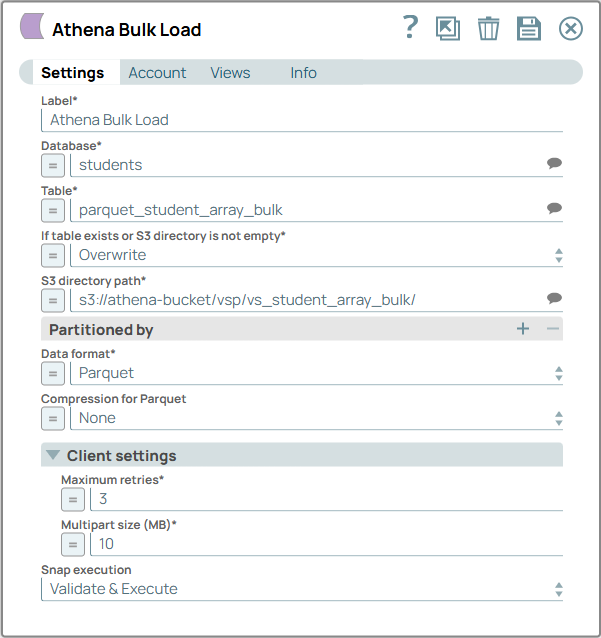

Query and load data in S3 using Athena

This example pipeline demonstrates how to query and load data using Amazon Athena Snaps.

Download this pipeline.

-

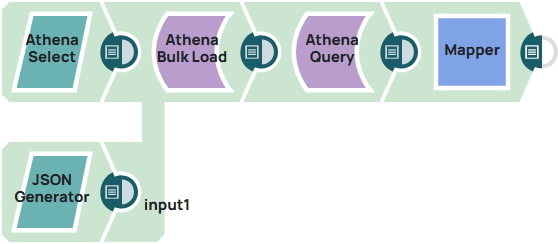

Configure the Athena Select Snap to query data

stored in Amazon S3 via Athena from the students database and

vs_student_array table. Optionally, you can choose to filter

results using Where clause, Group by, or Order by.

On validation, the Snap successfully retrieves the selected records from the specified table in S3 using Athena’s SQL query engine.

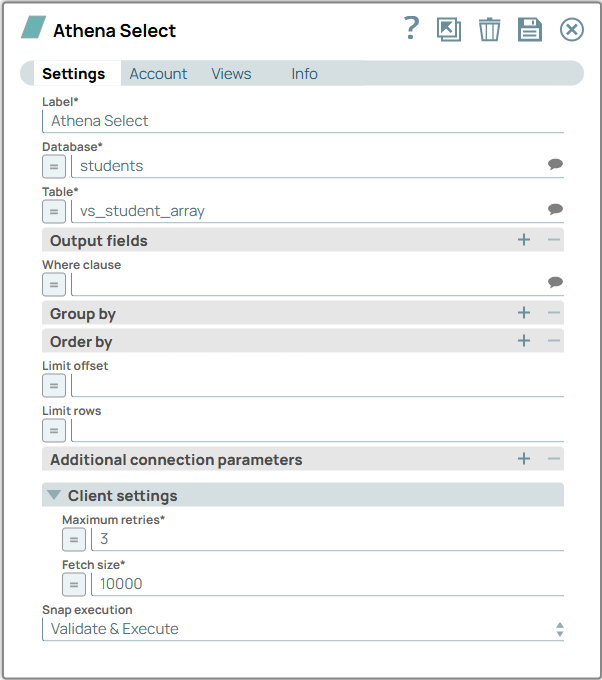

Athena Select Snap configuration Athena Select Snap output

-

Configure the JSON Generator Snap with the

id,subject, andscoredata to prepare input for the Athena Bulk Load Snap input for data ingestion into Athena database.

On validation, the Snap displays the success message of the record created and also displays the details of the customer record.[ { "id": "int", "subject": "array<string>", "score": "array<int>" } ] -

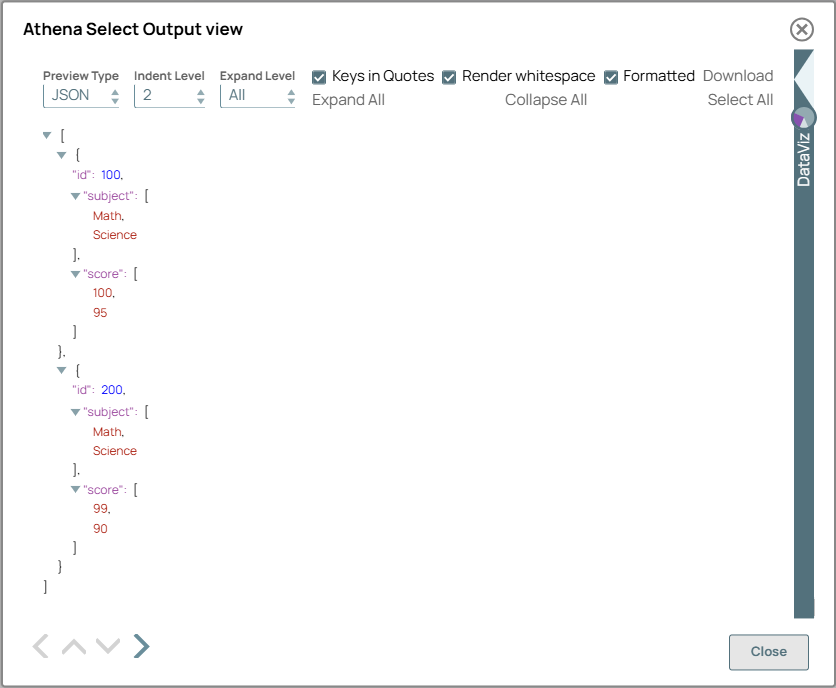

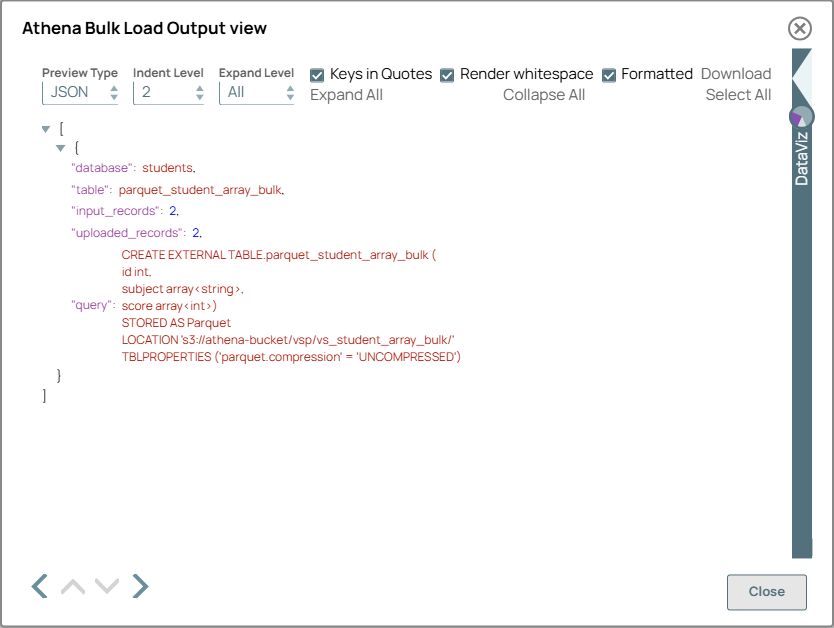

Configure the Athena Bulk Load Snap to load the

input data into the parquet_student_array_bulk table. Provide the

S3 directory path for storing the bulk-loaded data and set the data format to

Parquet.

On validation, the Snap uploads the data to the S3 directory and creates an external table in Athena using the specified parquet format.

Athena Bulk Load Snap configuration Athena Bulk Load Snap output

-

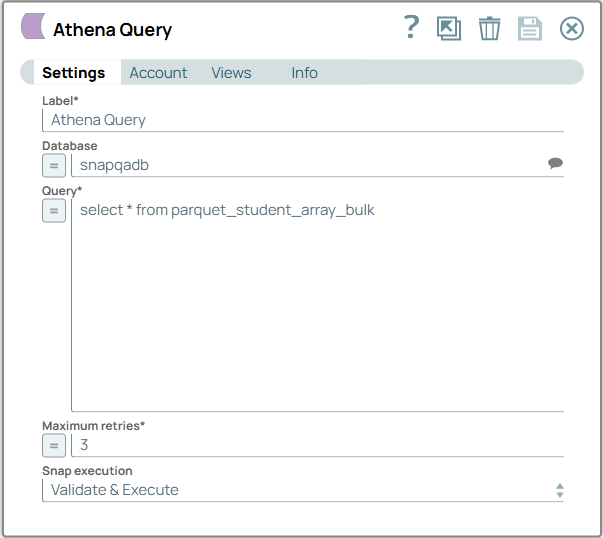

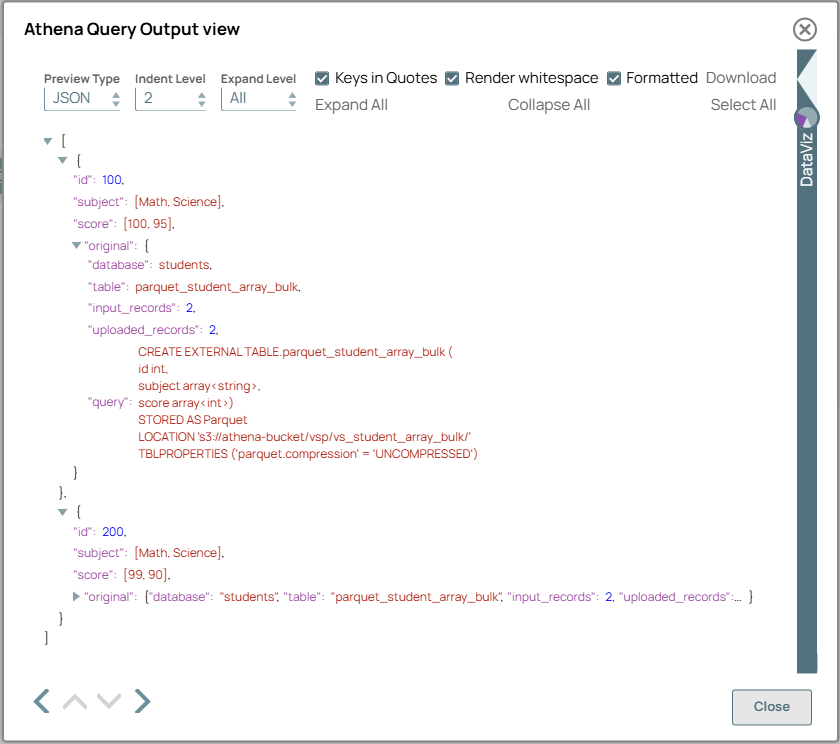

Configure the Athena Query Snap to retrieve data

from the parquet_student_array_bulk table using the SELECT

query.

On validation, the Snap successfully executes the query and returns the processed data from Athena, including all selected fields.

Athena Query Snap configuration Athena Query Snap output

-

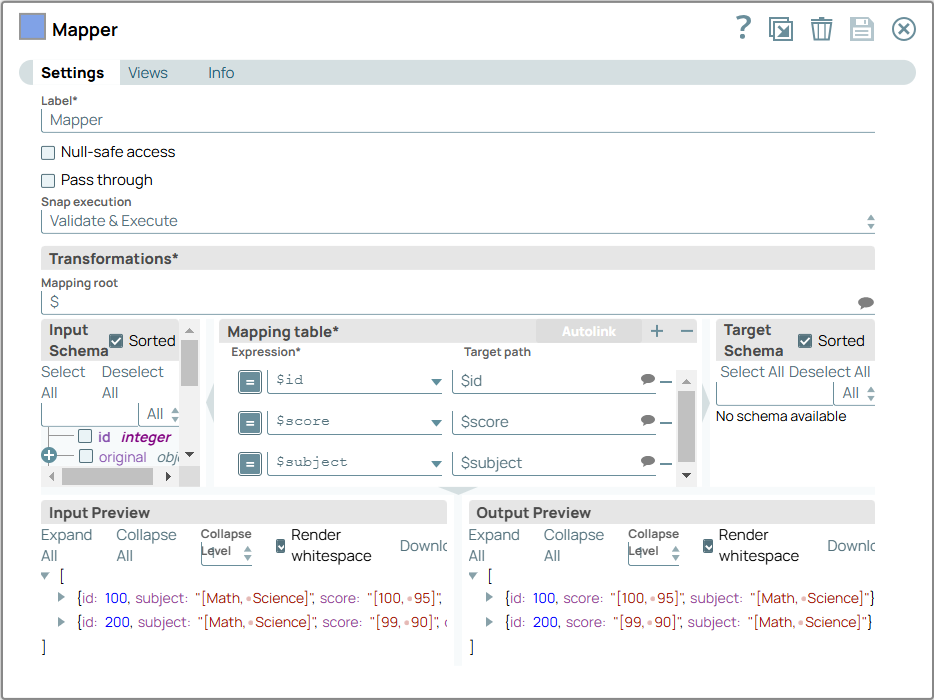

You can configure the Mapper Snap to

transform data for further use.

On validation, the Snap displays the output based on the specified mappings.

Tip:

Tip:- For copying data between Athena tables, a more efficient approach is to use File Operation Snap or S3 Copy Snap to move S3 objects directly and then use Athena Query Snap to create the target table. This avoids transferring millions of rows through the pipeline and leverages the AWS S3 network for faster data movement.

- While this example works for small datasets, Athena is designed to handle large-scale data processing, typically in terabytes (TB) or gigabytes (GB).

- Download and import the pipeline in to the SnapLogic Platform.

- Configure Snap accounts, as applicable.

- Provide pipeline parameters, as applicable.