Snowflake - Insert

Overview

You can use this Snap to execute a Snowflake SQL Insert statement with given values. Document keys will be used as the columns to insert into, and their values will be the values inserted. Missing columns from the document will have null values inserted into them.

Write-type Snap

-

Works in Ultra Tasks

Prerequisites

You must have minimum permissions on the database to execute Snowflake Snaps. To understand if you already have them, you must retrieve the current set of permissions. The following commands enable you to retrieve those permissions:

SHOW GRANTS ON DATABASE <database_name>

SHOW GRANTS ON SCHEMA <schema_name>

SHOW GRANTS TO USER <user_name>- You must enable the Snowflake account to use private preview features for creating the Iceberg table.

- External volume has to be created on the Snowflake worksheet, or Snowflake Execute snap. Learn more about creating external volume.

- Usage (DB and Schema): Privilege to use the database, role, and schema.

- Create table: Privilege to create a new table or insert data into an existing table.

grant usage on database <database_name> to role <role_name>;

grant usage on schema <database_name>.<schema_name>;

grant "CREATE TABLE" on database <database_name> to role <role_name>;

grant "CREATE TABLE" on schema <database_name>.<schema_name>;Learn more about Snowflake privileges: Access Control Privileges.

This Snap uses the INSERT command internally. It enables updating a table by inserting one or more rows into the table.

Limitations

- Snowflake does not support batch insert. As a workaround, use the Snowflake - Bulk Load Snap to insert records in batches. Though the Snowflake Insert Snap sends requests in batches, Snowflake does not support executing multiple SQL statements in a single API call. So, even if batching is enabled in a Snowflake Insert Snap, Snowflake will execute one SQL statement at a time.

- This Snap does not support creating an iceberg table with an external catalog in Snowflake as, currently, the endpoint only allows read-only access for the tables that are created using an external catalog without any write capabilities. Learn more about iceberg catalog options.

- Snowflake does not support cross-cloud and cross-region Iceberg tables when you use

Snowflake as the Iceberg catalog. If the Snap displays an error message such as

External volume <volume_name> must have a STORAGE_LOCATION defined in the local region ..., ensure that the External volume fielduses an active storage location in the same region as your Snowflake account.

Known Issues

Because of performance issues, all Snowflake Snaps now ignore the Cancel queued queries when pipeline is stopped or if it fails option for Manage Queued Queries, even when selected. Snaps behave as though the default Continue to execute queued queries when the Pipeline is stopped or if it fails option were selected.

Snap views

| View | Description | Examples of upstream and downstream Snaps |

|---|---|---|

| Input |

This Snap has one document input view by default. A second view can be added for table metadata as a document so that the table is created in Snowflake with a similar schema as the source table. This schema is usually from the second output of a database Select Snap. If the schema is from a different database, there is no guarantee that all the data types would be properly handled. |

|

| Output |

The Snap outputs one document for every record written. If an output view is available, the original document used to create the statement is written to the output with the status of the insert executed. |

|

| Error |

Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter when running the pipeline by choosing one of the following options from the When errors occur list under the Views tab. The available options are:

Learn more about Error handling in Pipelines. |

|

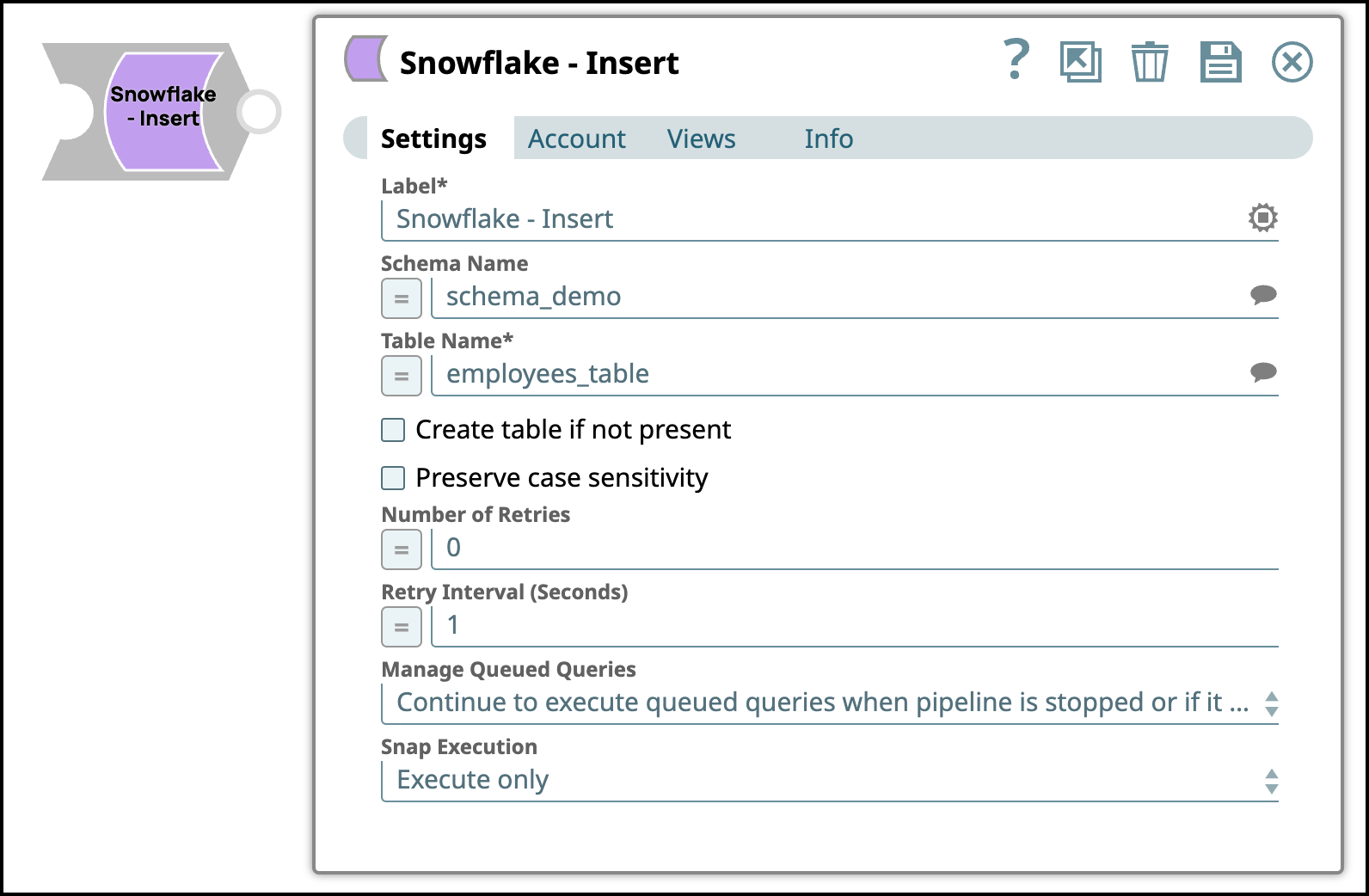

Snap settings

- Expression icon (

): Allows using JavaScript syntax to access SnapLogic Expressions to set field values dynamically (if enabled). If disabled, you can provide a static value. Learn more.

- SnapGPT (

): Generates SnapLogic Expressions based on natural language using SnapGPT. Learn more.

- Suggestion icon (

): Populates a list of values dynamically based on your Snap configuration. You can select only one attribute at a time using the icon. Type into the field if it supports a comma-separated list of values.

- Upload

: Uploads files. Learn more.

: Uploads files. Learn more.

| Field / Field set | Type | Description |

|---|---|---|

| Label | String | Required. Specify a unique name for the Snap. Modify this to be more appropriate, especially if there are more than one of the same Snap in the pipeline. |

| Schema Name | String/Expression | Required. Specify the database schema name. In case it

is not defined, then the suggestion for the Table Name retrieves all tables names of

all schemas. The property is suggestible and will retrieve available database

schemas during suggest values. Note: The values can be passed using the Pipeline

parameters but not the upstream parameter. Default value: N/A Example: Schema_demo |

| Table Name | String/Expression | Required. Specify the name of the table to execute insert-on. Note:

Default value: N/A Example: employees_table |

| Create table if not present | Checkbox | Select this checkbox to automatically create the target table if it does not

exist. Note:

Default status: deselected |

| Iceberg table | Checkbox | Appears when you select Create table if not present.

Select this checkbox to create an Iceberg table with the Snowflake catalog. Learn

more about how to create and Iceberg table with Snowflake as the

Iceberg catalog. Default status: deselected |

| External volume | String/Expression/ Suggestion | Required. Appears when you select the Iceberg

table.Specify the external volume for the Iceberg table. Learn more

about how to configure an external volume for Iceberg

tables. Default value: N/A Example: employees |

| Base location | String/Expression | Required. Appears when you select the Iceberg

table checkbox. Specify the Base location for the Iceberg table.

Note: The base location is the relative path from the external

volume. Default value: N/A Example: iceberg_s3_stage |

| Preserve case sensitivity | Checkbox |

Select this checkbox to preserve the case sensitivity of the column names.

Default status: deselected |

| Number of retries | Integer/Expression | Specify the maximum number of attempts to be made to receive a response. The

request is terminated if the attempts do not result in a response. If the value is larger than 0, the Snap first downloads the target file into a temporary local file. If any error occurs during the download, the Snap waits for the time specified in the Retry interval and attempts to download the file again from the beginning. When the download is successful, the Snap streams the data from the temporary file to the downstream Pipeline. All temporary local files are deleted when they are no longer needed. Minimum Value: 0 Note: Ensure that the

local drive has sufficient free disk space to store the temporary local

file. Default value: 0 Example: 3 |

| Retry interval (seconds) | Integer/Expression | Specifies the time interval between two successive retry requests. A retry

happens only when the previous attempt resulted in an exception. Default value: 1 Example: 10 |

| Manage Queued Queries | Dropdown list |

Default value: Select this property to determine whether the

Snap should continue or cancel the execution of the queued Snowflake Execute SQL

queries when you stop the pipeline.

Note: If you select Cancel queued queries when

the pipeline is stopped or if it fails, then the read queries under execution

are canceled, whereas the write type of queries under execution are not

canceled. Snowflake internally determines which queries are safe to be canceled

and cancels those queries. Default value: Continue to execute queued queries when the Pipeline is stopped or if it fails Example: Cancel queued queries when the pipeline is stopped or if it fails |

| Snap execution | Dropdown list |

Choose one of the three modes in

which the Snap executes. Available options are:

Default value: Execute only Example: Validate & Execute |

Instead of building multiple Snaps with interdependent DML queries, we recommend that you use the Snowflake - Multi Execute Snap.

In a scenario where the downstream Snap does depend on the data processed on an Upstream Database Bulk Load Snap, use the Script Snap to add delay for the data to be available.

For example, when performing a create, insert and delete function sequentially on a pipeline, using a Script Snap helps in creating a delay between the insert and delete function. Else, it may turn out that the delete function is triggered even before inserting the records on the table.