Bulk Load Employee data from a CSV file into a DLP instance

Consider a scenario where employee data stored in a CSV file must be loaded into a Databricks Lakehouse Platform (DLP) instance so that it can be analyzed.


Prerequisites

- Configure the Bulk Load Snap account to connect to the AWS S3 service using Source Location Credentials to read the CSV file.

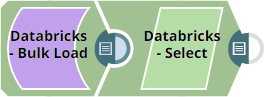

- We need two Snaps:

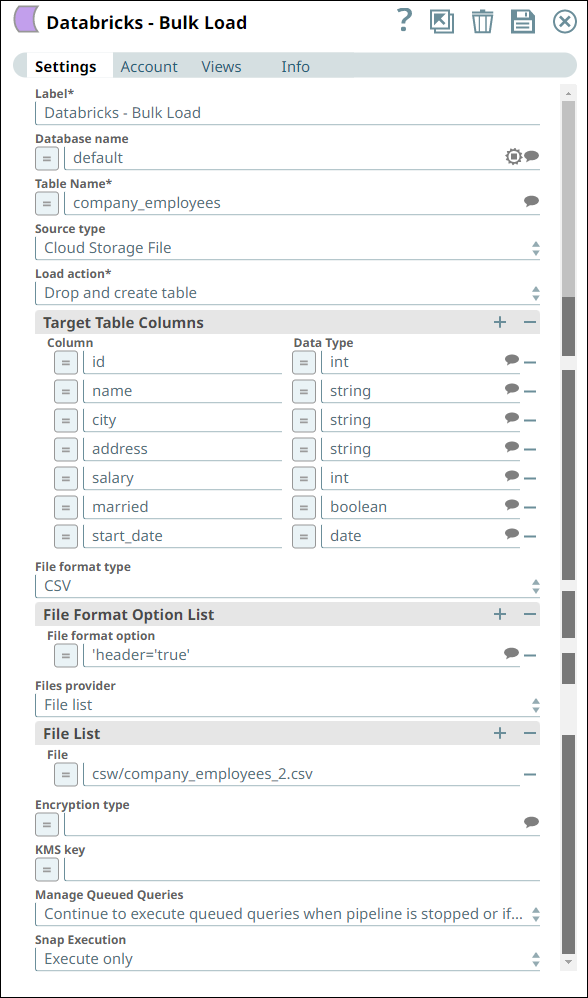

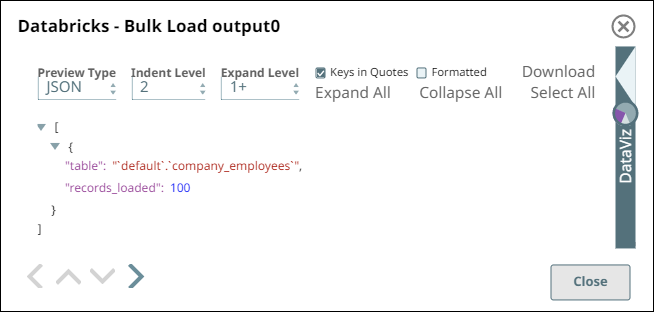

- Databricks Bulk Load: To load the data from the CSV file in an S3 location.

-

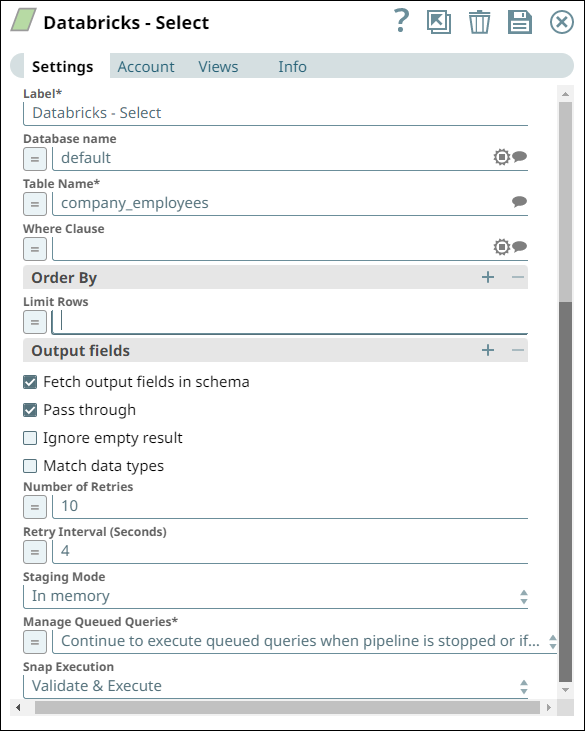

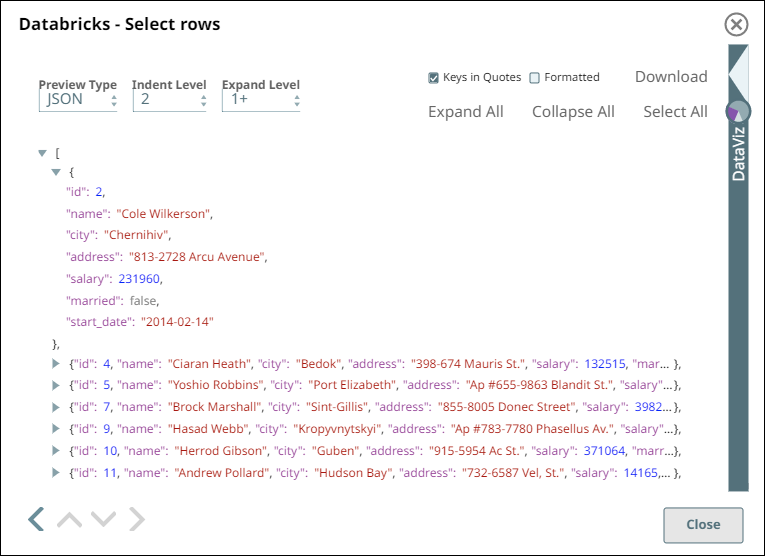

Databricks Select: To read the data loaded in the target table and generate some insights.

- Download and import the pipeline into SnapLogic.

- Configure Snap accounts as applicable.

- Provide pipeline parameters as applicable.
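
To make the two Snaps' roles more concrete, the following minimal Python sketch builds the kind of Databricks SQL statements that roughly correspond to each step: a `COPY INTO` statement for loading a CSV file from S3, and an aggregate `SELECT` for a simple insight. This is an illustration under stated assumptions, not a description of how the Snaps are implemented. The table name `employees`, the S3 path, the `department` column, and both helper functions are hypothetical. Credentials are left out because the Snap account supplies them.

```python
def build_copy_into(table: str, s3_path: str, header: bool = True) -> str:
    """Build a Databricks SQL COPY INTO statement for a CSV source.

    Roughly analogous to the Bulk Load step. The target table is assumed
    to exist already; credentials are intentionally omitted.
    """
    header_opt = "true" if header else "false"
    return (
        f"COPY INTO {table}\n"
        f"FROM '{s3_path}'\n"
        "FILEFORMAT = CSV\n"
        f"FORMAT_OPTIONS ('header' = '{header_opt}', 'inferSchema' = 'true')"
    )


def build_insight_query(table: str, group_col: str) -> str:
    """Build a SELECT that counts employees per value of group_col.

    Roughly analogous to the Select step that reads the loaded table.
    """
    return (
        f"SELECT {group_col}, COUNT(*) AS employee_count\n"
        f"FROM {table}\n"
        f"GROUP BY {group_col}\n"
        "ORDER BY employee_count DESC"
    )


if __name__ == "__main__":
    # Hypothetical names: replace with your own table, bucket, and column.
    print(build_copy_into("employees", "s3://example-bucket/data/employees.csv"))
    print()
    print(build_insight_query("employees", "department"))
```

In the pipeline itself, the equivalent values (target table, source file path, and query) are entered in the Snap settings or passed as pipeline parameters instead of being assembled in code.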