Databricks - Delete

Overview

You can use this Snap to execute a Databricks SQL DELETE statement based on specific conditions. Ensure adequate discretion while using this Snap as it can truncate the table if run without specifying a WHERE condition for the DELETE statement.

Write-type Snap

Does not support Ultra Tasks

Prerequisites

-

Valid access credentials to a DLP instance with adequate access permissions to perform the action in context.

-

Valid access to the external source data in one of the following: Azure Blob Storage, ADLS Gen2, DBFS, GCP, AWS S3, or another database (JDBC-compatible).

Limitations

Snaps in the Databricks Snap Pack do not support array, map, and struct data types in their input and output documents.

Known issues

When you add an input view to this Snap, ensure that you configure the Batch size as

1 in the Snap’s account configuration. For any other batch size, the Snap fails

with the exception: Multi-batch parameter values are not supported for this query

type.

Snap views

| View | Description | Examples of upstream and downstream Snaps |

|---|---|---|

| Input | A JSON document containing the reference to the table and rows to be deleted. | |

| Output | A JSON document containing the result of the delete operation on the target table. | |

| Error |

Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter when running the pipeline by choosing one of the following options from the When errors occur list under the Views tab. The available options are:

Learn more about Error handling in Pipelines. |

|

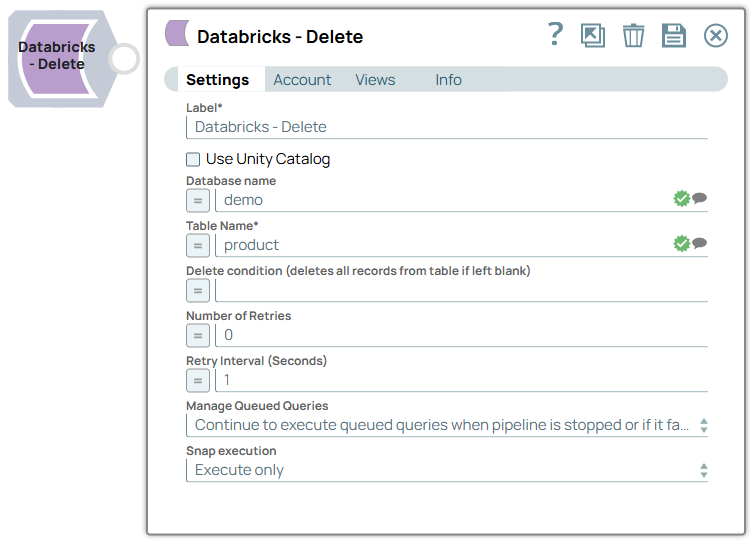

Snap settings

- Expression icon (

): Allows using pipeline parameters to set field values dynamically (if enabled). SnapLogic Expressions are not supported. If disabled, you can provide a static value.

- SnapGPT (

): Generates SnapLogic Expressions based on natural language using SnapGPT. Learn more.

- Suggestion icon (

): Populates a list of values dynamically based on your Snap configuration. You can select only one attribute at a time using the icon. Type into the field if it supports a comma-separated list of values.

- Upload

: Uploads files. Learn more.

: Uploads files. Learn more.

| Field / Field set | Type | Description |

|---|---|---|

| Label | String | Required. Specify a unique name for the Snap. Modify this to be more appropriate, especially if more than one of the same Snaps is in the pipeline. Default value: Databricks - Delete Example: Db_Del_Duplicates |

| Use unity catalog | Checkbox | Select this checkbox to use the Unity catalog to access data from the

catalog. Default status: Deselected |

| Catalog name | String/Expression/ Suggestion | Appears when you select Use unity catalog Specify the name of the catalog for using the unity catalog.Default value: hive_metastore Example: xyzcatalog |

| Database name | String/Expression/ Suggestion | Enter your corresponding DLP database name for the DELETE statement to delete

existing rows from the table. Default value: None. Example: Cust_DB |

| Table Name | String/Expression/ Suggestion | Required. Enter your table name for the DELETE statement

to delete existing rows from. Default value: None. Example: Cust_List |

| Delete condition (deletes all records from table if left blank) | String/Expression | Specify the condition for the DELETE statement to filter the rows to delete

from the target table. Note: Specify a valid WHERE clause for the delete condition.

If you leave this field blank, the Snap deletes all the records from the

table. Default value: N/A Example: last_login_date < ‘2010-01-01’> |

| Number of Retries | Integer/Expression | Specifies the maximum number of retry attempts when the Snap fails to

write. Minimum value: 0 Default value: 0 Example: 3

|

| Retry Interval (seconds) | Integer/Expression | Specifies the minimum number of seconds the Snap must wait before each retry

attempt. Minimum value: 1 Default value: 1 Example: 3 |

| Manage Queued Queries | Dropdown list | Select this property to determine whether the Snap should continue or cancel

the execution of the queued Databricks SQL queries when you stop the Pipeline. Note:

If you select Cancel queued queries when pipeline is stopped or if it

fails, then the read queries under execution are cancelled, whereas the write

type of queries under execution are not cancelled. Databricks internally

determines which queries are safe to be cancelled and cancels those

queries. Note: Due to an issue with DLP, aborting an ELT Pipeline validation

(with preview data enabled) causes only those SQL statements that retrieve data

using bind parameters to get aborted while all other static statements (that use

values instead of bind parameters) persist.

To avoid this issue, ensure that you always configure your Snap settings to use bind parameters inside its SQL queries. Default value: Continue to execute queued queries when pipeline is stopped or if it fails. Example: Cancel queued queries when pipeline is stopped or if it fails |

| Snap execution | Dropdown list |

Choose one of the three modes in

which the Snap executes. Available options are:

Default value: Execute only Example: Validate & Execute |

Troubleshooting

| Error | Reason | Resolution |

|---|---|---|

| Missing property value | You have not specified a value for the required field where this message appears. | Ensure that you specify valid values for all required fields. |