Sample

The Sample Snap is a Flow-type Snap that enables you to generate a sample dataset from the input dataset.

Overview

The Snap performs sampling using one of the following algorithms and a predefined pass through percentage:

- Linear Split

- Streamable Sampling

- Strict Sampling

- Stratified Sampling

- Weighted Stratified Sampling

These algorithms are explained in the Snap Settings section below.

You can also provide a random seed so that the same seed value generates the same sample set. To control the Snap's use of node memory, configure the maximum percentage of memory that the Snap can use to buffer the input dataset. If this limit is exceeded, the Snap writes the dataset to a temporary local file. This helps you avoid timeout errors when executing the pipeline.
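The effect of a fixed random seed can be illustrated with a minimal Python sketch. It assumes a per-record sampler in which each record passes independently with the given probability. The function name `sample_stream` and the use of Python's `random.Random` are illustrative assumptions, not the Snap's actual implementation.

```python
import random

def sample_stream(records, pass_through, seed=None):
    """Yield each record independently with probability `pass_through`.

    Hypothetical helper: a sketch of seeded sampling, not the Snap's code.
    """
    rng = random.Random(seed)  # private generator; seed=None is not reproducible
    for record in records:
        if rng.random() < pass_through:
            yield record

records = list(range(1000))
first = list(sample_stream(records, 0.5, seed=12345))
second = list(sample_stream(records, 0.5, seed=12345))

# Same seed and same input order give the same sample.
assert first == second
```

Because the generator is consumed one record at a time, reproducibility in this sketch depends on the input arriving in the same order on every run.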

Flow-type Snap

Works in Ultra Tasks only when Streamable Sampling is selected as the sampling algorithm.

Prerequisites

- A basic understanding of the sampling algorithms supported by the Snap is preferable.

Limitations and known issues

None.

Snap views

| View | Description | Examples of upstream and downstream Snaps |
|---|---|---|
| Input | This Snap has exactly one document input view. It receives the documents from which the sample dataset is generated. The Snap accepts both numeric and categorical data; the Stratified Sampling and Weighted Stratified Sampling algorithms require datasets containing categorical fields. | |
| Output | This Snap has at most two document output views. | |
| Error | Error handling is a generic way to handle errors without losing data or failing the Snap execution. You can handle the errors that the Snap might encounter when running the pipeline by choosing one of the options from the When errors occur list under the Views tab. Learn more about Error handling in Pipelines. | |

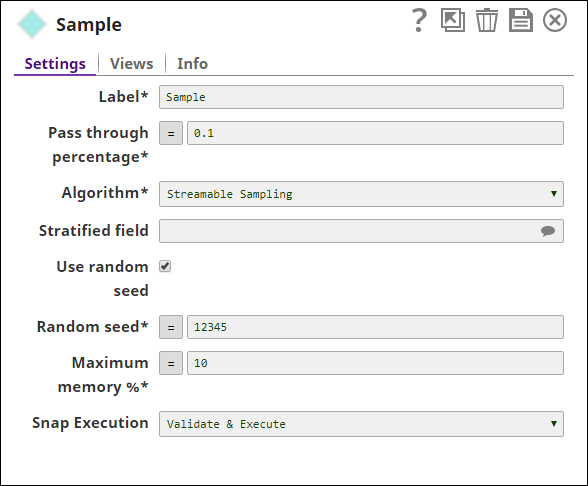

Snap settings

- Expression icon: JavaScript syntax to access SnapLogic Expressions to set field values dynamically (if enabled). If disabled, you can provide a static value. Learn more.

- SnapGPT: Generates SnapLogic Expressions based on natural language using SnapGPT. Learn more.

- Suggestion icon: Populates a list of values dynamically based on your Account configuration.

- Upload: Uploads files. Learn more.

| Field / field set | Type | Description |
|---|---|---|
| Label | String | Required. Specify a unique name for the Snap. Choose a more descriptive name, especially if the pipeline contains more than one Sample Snap. Default value: Sample. Example: Sampling |
| Pass through percentage | String/Expression | Required. The fraction of input records to pass through to the output, specified as a decimal (for example, 0.5 for 50%). This value is treated differently based on the algorithm selected. Note: The number of records output by the Snap is determined by the pass through percentage and the total number of records present. If there are 100 records and the pass through percentage is 0.5, 50 records are expected to be passed through. If there are 103 records and the pass through percentage is 0.5, only 51 records are expected to be passed through. With the Stratified Sampling or Weighted Stratified Sampling algorithm, the number of records per class is also factored in, so the count can vary further. Default value: 0.5 |
| Algorithm | Dropdown list | Required. The sampling algorithm to be used. Choose one of the following options: Linear Split, Streamable Sampling, Strict Sampling, Stratified Sampling, or Weighted Stratified Sampling. If Stratified Sampling or Weighted Stratified Sampling is selected, the Stratified field property must also be configured. Default value: Streamable Sampling |
| Stratified field | String/Expression/Suggestion | The field in the dataset that contains classification information about the data. Select the field to be treated as the stratified field; sampling is done based on this field. Example: Consider an employee record dataset containing fields such as Name, ID, Position, and Location. The fields Position and Location help you classify the data, so the input in this property for this case is $Position or $Location. Default value: None |
| Use random seed | Checkbox | If selected, the value specified in the Random seed field is applied to the randomizer to get reproducible results. Default status: Selected |
| Random seed | String/Expression | Required if the Use random seed property is selected. The number used as a static seed for the randomizer. The result can differ for the same seed if the Maximum memory % value or the JCC memory is different. Default value: 12345 |
| Maximum memory % | String/Expression | Required. The maximum portion of the node's memory, as a percentage, that can be used to buffer the incoming dataset. If this percentage is exceeded, the dataset is written to a temporary local file and the sample is generated from that file. This setting is useful for handling large datasets without over-utilizing the node memory. The minimum default memory to be used by the Snap is set at 100 MB. Default value: 10 |
| Snap execution | Dropdown list | Select one of the three modes in which the Snap executes. Default value: Execute only. Example: Validate & execute |
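The record counts given in the note for Pass through percentage can be reproduced with a short Python sketch. It assumes the expected count is the record count multiplied by the pass through value and rounded down, which matches both examples in the table (100 gives 50, 103 gives 51). The per-class calculation is a further assumption about how stratified counts might be derived; the helper names and the employee data are hypothetical.

```python
from collections import Counter
from decimal import Decimal

def expected_sample_size(total_records, pass_through):
    """Expected output count: total * pass_through, rounded down (assumption)."""
    # Decimal(str(...)) avoids binary floating-point surprises such as 0.29 * 100.
    return int(Decimal(str(pass_through)) * total_records)

def expected_per_class(labels, pass_through):
    """Hypothetical per-class counts for a stratified sample (assumption)."""
    return {label: expected_sample_size(count, pass_through)
            for label, count in Counter(labels).items()}

print(expected_sample_size(100, 0.5))  # 50
print(expected_sample_size(103, 0.5))  # 51

# 103 hypothetical employee records, stratified on $Position.
positions = ["Engineer"] * 51 + ["Analyst"] * 51 + ["Director"] * 1
per_class = expected_per_class(positions, 0.5)
print(per_class)                # {'Engineer': 25, 'Analyst': 25, 'Director': 0}
print(sum(per_class.values()))  # 50
```

Under these assumptions, rounding within each class yields 50 records instead of 51, which illustrates why the output count can vary when the number of records per class is taken into account.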

Temporary files

During execution, data processing on Snaplex nodes occurs principally in memory as streaming and is unencrypted. When processing larger datasets that exceed the available compute memory, the Snap writes unencrypted pipeline data to local storage to optimize performance. These temporary files are deleted when the pipeline execution completes. You can configure the location of the temporary data in the Global properties table of the Snaplex node properties, which can also help avoid pipeline errors caused by insufficient disk space. Learn more about Temporary Folder in Configuration Options.
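The buffer-then-spill pattern described above resembles Python's standard `tempfile.SpooledTemporaryFile`, which holds data in memory until a size limit is exceeded and then moves it to a temporary file on disk. The sketch below is an analogy only: `buffer_records` and the byte-based threshold are hypothetical, whereas the Snap expresses its limit as a percentage of node memory.

```python
import json
import tempfile

def buffer_records(records, max_bytes_in_memory):
    """Buffer records in memory, spilling to a temporary file past the limit.

    Hypothetical helper: an analogy for the Snap's behavior, not its code.
    """
    # Data stays in memory until more than max_size bytes have been written,
    # then SpooledTemporaryFile transparently rolls over to a file on disk.
    buf = tempfile.SpooledTemporaryFile(max_size=max_bytes_in_memory)
    for record in records:
        buf.write(json.dumps(record).encode("utf-8") + b"\n")
    buf.seek(0)  # rewind so the caller can read the buffered records
    return buf

records = [{"id": i, "position": "Engineer"} for i in range(1000)]

# Closing the buffer (here via the with block) deletes any temporary file.
with buffer_records(records, max_bytes_in_memory=1024) as buf:
    restored = [json.loads(line) for line in buf]

assert restored == records
```

As in the Snap, the reader of the buffer does not need to know whether the data ended up in memory or on disk, and the temporary file is removed once processing finishes.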